Beyond Shannon—The Structure and Meaning of Information

6-8 May 2019

Institute for Advanced Study, University of Amsterdam

Co-Organizers: Peter Sloot (IAS), James P Crutchfield (CSC, UC Davis), Rick Quax (IAS), Ryan G James (CSC, UC Davis), Jeffrey Emenheiser (CSC, UC Davis)

Background & Goals

“When you use the word information, you should rather use the word form.” René Thom

A complete, interpretable, and operational decomposition of information has remained elusive despite nearly 70 years of effort. Significant recent progress has been made by narrowing the scope: How can two variables influence a third? And, expanding upon this, how can many variables influence another? Taking a broader view, much of successful empirical science turns on determining which informational “modes of dependency” the variables of a joint distribution can exhibit. Once these modes have been identified, the task of quantifying them remains. Answering these questions is crucial for better understanding complex systems in particular, since they are thought to generate highly intricate informational structures. Falling short in these challenges is a fundamental failing: We understand neither what a complex system is nor what complexity is.

Why does such an informational decomposition not yet exist, in spite of significant interest and much effort over decades? One challenge is that there is little guiding theory describing the possible kinds of nonlinear, multivariate interactions. Another is that the methods and measures of information theory, once extended to the multivariate setting, no longer possess the operational meaning that defined their bivariate forms. This has led to the unfortunate situation where incorrect or misleading meanings and structural interpretations have been associated with informational measures. This, in turn, has effectively eclipsed a focused development of measures that answer the correlational, structural, and causal questions asked in science.

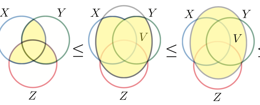

One promising framework making inroads in these challenges is the partial information decomposition (PID). It posits that the information two sources (X0 and X1) share with a target (Y) decomposes into four components: that which X0 and X1 redundantly share with Y, that which X0 uniquely shares with Y, that which X1 uniquely shares with Y, and finally what X0 and X1 synergistically share with Y. Despite much recent effort, though, little agreement has emerged as to how the components should be quantified.
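To make the four-way split concrete, the following minimal Python sketch computes the three Shannon mutual informations that are available for two sources and one target. The helper names (`marginal`, `mutual_information`) and the two toy distributions are illustrative assumptions, not part of any particular PID proposal. In the XOR case neither source alone tells us anything about Y, yet together they determine it, which is the behavior usually read as synergy. In the copy case both sources carry the same bit about Y, which is the behavior usually read as redundancy.

```python
from collections import defaultdict
from itertools import product
from math import log2


def marginal(joint, idx):
    """Marginalize a joint distribution {outcome_tuple: prob} onto positions idx."""
    out = defaultdict(float)
    for outcome, p in joint.items():
        out[tuple(outcome[i] for i in idx)] += p
    return dict(out)


def mutual_information(joint, a, b):
    """I(A;B) in bits; a and b are tuples of positions within each outcome."""
    pa, pb, pab = marginal(joint, a), marginal(joint, b), marginal(joint, a + b)
    total = 0.0
    for outcome, p in pab.items():
        if p > 0:
            ka, kb = outcome[:len(a)], outcome[len(a):]
            total += p * log2(p / (pa[ka] * pb[kb]))
    return total


# Outcomes are ordered (X0, X1, Y).
# XOR: X0 and X1 are independent fair bits and Y = X0 xor X1.
xor = {(x0, x1, x0 ^ x1): 0.25 for x0, x1 in product((0, 1), repeat=2)}
# Copy: one fair bit is duplicated into X0, X1, and Y.
copy = {(b, b, b): 0.5 for b in (0, 1)}

for name, dist in (("xor", xor), ("copy", copy)):
    i0 = mutual_information(dist, (0,), (2,))      # I(X0;Y)
    i1 = mutual_information(dist, (1,), (2,))      # I(X1;Y)
    i01 = mutual_information(dist, (0, 1), (2,))   # I(X0,X1;Y)
    print(f"{name}: I(X0;Y)={i0:.1f} I(X1;Y)={i1:.1f} I(X0,X1;Y)={i01:.1f}")
```

Only three Shannon quantities are measured here, while the decomposition asks for four components. Under the consistency relations usually assumed in the PID literature, this leaves one degree of freedom open, which is one way to see why an additional definition is needed and why proposals differ.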

Moreover, for more than two sources, while the original partial information decomposition identified an algebraic lattice of multivariate dependencies into which the mutual information should be decomposed, there is now a growing consensus that this lattice is incorrect. How the lattice needs to be modified remains unknown. That is, the very architecture of statistical dependency requires rethinking.

Finally, if the PID partitioning of variables into sources and target is inappropriate for the system under study, currently there is little understanding as to how shared information should be decomposed. More concretely, what are the elements to be quantified and upon what scaffold of relationships are they to be placed? Should information be associated with each variable? All subsets of variables? Antichains of variables? Antichain covers? Each of these admits at least one lattice onto which information can be affixed.
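As a rough illustration of how quickly these candidate scaffolds grow, the sketch below enumerates three of the options by brute force for a small number of variables: nonempty subsets, antichains of nonempty subsets (collections in which no member contains another), and antichain covers. The function names are hypothetical, and "antichain cover" is assumed here to mean an antichain whose members together include every variable. The enumeration only counts elements; it does not build the ordering relations of any lattice.

```python
from itertools import combinations


def nonempty_subsets(n):
    """All nonempty subsets of the variables 0..n-1, as frozensets."""
    return [frozenset(c) for k in range(1, n + 1)
            for c in combinations(range(n), k)]


def is_antichain(collection):
    """True if no set in the collection is a proper subset of another."""
    return not any(a < b or b < a for a, b in combinations(collection, 2))


def antichains(n):
    """All nonempty antichains of nonempty subsets (brute force, small n only)."""
    subsets = nonempty_subsets(n)
    return [coll
            for k in range(1, len(subsets) + 1)
            for coll in combinations(subsets, k)
            if is_antichain(coll)]


def antichain_covers(n):
    """Antichains whose union is the full variable set (assumed definition)."""
    everything = frozenset(range(n))
    return [coll for coll in antichains(n)
            if frozenset().union(*coll) == everything]


for n in (2, 3):
    print(n, len(nonempty_subsets(n)), len(antichains(n)), len(antichain_covers(n)))
```

For three variables this yields 7 nonempty subsets, 18 antichains, and 9 antichain covers, so the choice of scaffold already changes how many quantities must be defined.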

That said, how do we quantify these elements? Generally, the alternative lattices contain more elements than there are standard Shannon information measures, necessitating that we look beyond Shannon to quantify them.

These fundamental questions motivate a workshop that goes beyond Shannon communication theory, bringing together experts from a range of disciplines to take stock of the challenges and to develop new approaches.