Revealing order in the chaos

- 26 February 2005

- From New Scientist Print Edition. Subscribe and get 4 free issues.

- Mark Buchanan

- Mark Buchanan's latest book is Small World, published by Weidenfeld & Nicolson, 2003

WHEN it comes to predicting significant things, you could say that when we're good, we're very very good, and when we're bad, we're useless. We can spot weaknesses in an aircraft wing long before it fails, and foretell an eclipse centuries in advance. Yet we seem totally powerless to predict other events - annoying jam-ups in a manufacturing line, cascading power failures, earthquakes or financial crises. Why?

For two decades, researchers have suspected that what makes such events so unpredictable is their inherent complexity. In the Earth's crust and its ecosystems, and in any economy, events depend on the delicate interactions of millions of parts, and seemingly insignificant accidents can sometimes have massive repercussions. Mathematicians have even declared that some complex systems are "computationally irreducible", meaning there is no short cut to knowing their future. The only way to find out what will happen is to actually let it happen.

But it now seems that this conclusion may have been unduly pessimistic. Revisiting the mathematics behind this topic, researchers have discovered that if you ask the right kinds of questions, even computationally irreducible systems can be more predictable than anyone thought. So foretelling events like financial meltdowns and earthquakes might just be possible after all. Even better, this new perspective could help to answer some of the deepest questions of science.

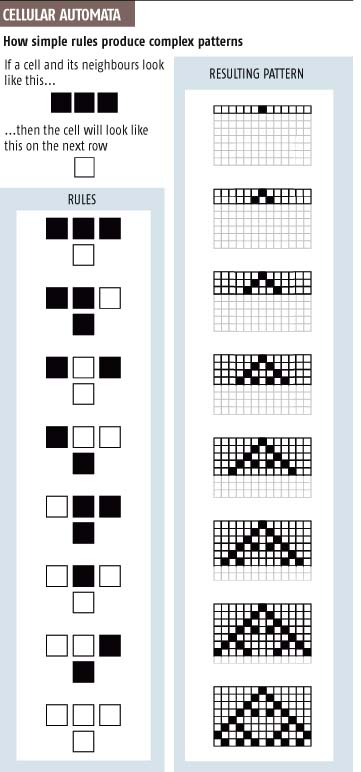

Much of this breakthrough has come from research into computer programs known as cellular automata. The simplest kind of cellular automaton is a row of cells - each of which can be, say, black or white - along with a set of rules that determine how each cell's colour will change from one row to the next

In 1984 mathematician Stephen Wolfram published an exhaustive study of the 256 rules to see what he could learn from them. He found that some led to quite simple behaviour, with the system quickly falling into a static or periodically repeating pattern. Many others, however, generated highly complex and apparently random patterns. Wolfram's analysis led him to suggest that automata in this latter class were computationally irreducible, and subsequent work by others even proved this for one specific automaton - that corresponding to "rule 110".

It was a blow to scientists trying to get a handle on complex systems. Far from being an obscure mathematical plaything, cellular automata embody the very essence of physics and engineering. In these systems, influences pass from one point to neighbouring points, just as in real physical processes. Indeed, when researchers simulate physical systems on computers, the equations they use are often based on cellular automata.

Wolfram suggested that the computational irreducibility he found in certain cellular automata might also be commonplace in the more complex systems of the real world: it might just explain why so many events, from earthquakes to ecological upheavals, prove hard to predict. Our frustration, he concluded, could be rooted in the very principles of mathematics.

Fortunately, however, the story doesn't end there. Physicist Nigel Goldenfeld of the University of Illinois at Urbana-Champaign thinks there's a way out. Goldenfeld studies pattern formation in structures as diverse as snowflakes and limestone deposits at geothermal hot springs. During two decades of research, he has reached the view that the best way to study patterns is through "coarse-grained" models: that is, models that leave out most of the details and focus only on the broad-brush description of the pattern-forming process. He and his colleagues have found that completely different situations can have precisely the same logic. The convection patterns produced in a pan of boiling water, for instance, are uncannily similar to the patterns in a shaken tray of sand. Goldenfeld's team has developed mathematical ideas to explain why this might be. "Because of this work," says Goldenfeld, "I became interested in what would happen if the same ideas were applied to cellular automata."

Deceptively simple

So late last year, he and colleague Navot Israeli, also at Illinois, began exploring the possibility that some cellular automata might actually be simpler than they appeared - even those thought to be computationally irreducible. Perhaps you just had to know which details to ignore and then adopt the appropriate "coarse-grained" perspective. To find out, the pair repeated Wolfram's exhaustive study of the 256 automata. "We hoped to find a few cases where this would work," Israeli says. And they did (Physical Review Letters, vol 92, p 74105).

In their scheme, they group the cells of the original system together in "supercells" comprising, say, 8 or 10 cells. Each supercell then corresponds to one cell of a new coarse-grained system, and its state is defined through some scheme; it might be black or white, for example, depending on whether it contains more black or white cells.

There are, obviously, many ways to define a coarse graining - choosing groups of different sizes, and using different schemes for determining the states of the new cells. But in general, many specific patterns of cells - each a specific state of the original cellular automata - will correspond to just one state in the new. With supercells of 10 cells each, for example, literally thousands of distinct patterns have more black cells than white - all would give a single black supercell in the coarse-grained system. The coarse-graining also modifies the time-step between applications of the rule that is then applied to the coarse-grained system, effectively skipping through some of the states.

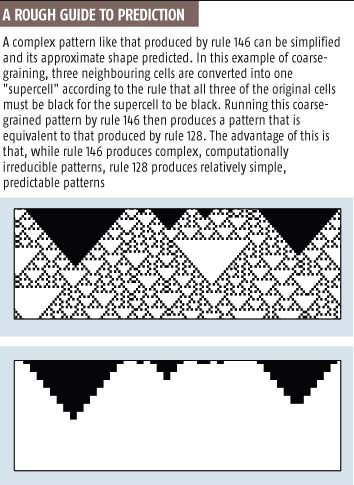

For each of Wolfram's 256 cellular automata, Goldenfeld and Israeli explored the consequences of a large number of possible coarse grainings. They then ran their coarse-grained pattern by the original rule. This produced a different pattern from the original. In 240 out of 256 of these cases, rules that produced relatively simple and predictable patterns mimicked the rough behaviour of rules that produce complex, computationally irreducible patterns. They had found a way to make unpredictable outcomes at least roughly predictable.

Most surprisingly, this was even possible for automata that are known to be computationally irreducible, such as the infamous rule 110. In every case where the coarse-graining worked, it produced a simpler system that reproduced the large-scale dynamics of the original.

"This is a crowning achievement," says physicist Didier Sornette of the University of California, Los Angeles. And it suggests that the situation with complex systems may not be so bleak after all: prediction may simply depend on descriptions at the right level of detail.

But of course, that's half the problem: while coarse-graining's success in basic models like this can give researchers hope, it doesn't tell them how to simplify messy real-world systems. However, Sornette and other researchers are making progress in this area, almost by trial and error, and they have had striking success even in some of the most difficult circumstances.

Roughing it

Two years ago, physicist Neil Johnson of the University of Oxford and his colleagues pioneered a coarse-grained model of real financial markets (New Scientist, 10 April 2004, p 34). They found it was remarkably successful at forecasting the foreign exchange market, predicting the market's daily ups and downs with an accuracy of more than 54 per cent. Getting it right that often can outweigh the transaction costs of trading and turn a profit.

Now Sornette and Jørgen Andersen of the University of Paris X have managed to pin down why Johnson's coarse-graining model is so effective, and in the process they have discovered a surprisingly simple way to show that markets should be especially easy to predict at certain times

Perhaps most boldly, physicist Jim Crutchfield of the Santa Fe Institute in New Mexico has devised a scheme that he believes could predict links between the past and future for virtually any system. Any physical process is a sequence of events - whether it is water flowing down a stream or a colony of bacteria infecting a wound - and prediction means mapping past histories of events onto possible future outcomes. In the late 1980s, Crutchfield began arguing that you could sort the various histories of a system into classes, so that all the histories in each class give the same outcome. Then, as with Goldenfeld and Israeli's coarse-grainings, many details of the underlying system might be irrelevant, making it possible to simplify the description and maybe finding a route to prediction.

For 15 years, Crutchfield and colleagues have been seeking mathematical procedures for doing this automatically, a process they call "computational mechanics". They have successfully applied the approach to a number of practical applications, helping to clarify the chaotic dynamics of dripping taps and identify hidden patterns in the molecular disorder of many real materials (New Scientist, 29 August 1998, p 36). "We've shown that there is a kind of order in the chaos," says Crutchfield.

The coarse-graining approach might even settle some long-standing scientific puzzles, Crutchfield suggests. After all, when it comes to knowledge, less is sometimes more. "This is what scientists do in their work - they try to strip irrelevant details away and gather histories into equivalent groups, thereby making theories as simple as possible," he says. Goldenfeld agrees: "In physics we only ever ask for approximate answers. I'm pretty sure that this is why physics works at all, and isn't hampered by computational irreducibility."

Only by ignoring vast amounts of molecular detail did researchers ever develop the laws of thermodynamics, fluid dynamics and chemistry. But it could go even deeper, Goldenfeld suggests. He thinks that coarse-graining could even have something to do with the laws of physics themselves. "The dream," Goldenfeld says, "is that as long as you look at long enough scales of space and time, you will inevitably observe processes that fit in with relativity, quantum mechanics and so on."

There is a new optimism among those in the business of prediction. The prospects for forecasting major events in ecology and economics - and maybe even earthquakes and cancers - suddenly look less bleak. Where we once felt powerless in the face of overwhelming complexity, there is now hope of seizing control. Coarse-graining might never give us a crystal-clear window on the future, but it might just make it clear enough.

Prediction days

In financial markets there are only two kinds of strategies: those that depend on the immediate past and those that do not. Though this might seem a banal insight, it has enabled Jørgen Andersen of the University of Paris X and Didier Sornette of the University of California, Los Angeles, to begin predicting these markets. It suggests that real markets might sometimes lock themselves into certain futures. For example, if more than half the population came to be using strategies that disregard the immediate past, then the future would be certain as soon as the ideas behind these strategies became clear.

Such events would be extremely unlikely if players chose their strategies at random, but real people don't. Instead, they tend to use ideas that have done well recently, and end up acting alike. In a computer simulation of a simplified market, Sornette and Anderson found that this phenomenon turned 17 per cent of the days into "prediction days", when the markets were at their most readable.

Moving to real markets, the pair used data for the NASDAQ over 60 days to train a computer model to recognise upcoming prediction days. They then used the model's output to make predictions of the NASDAQ ahead of time. Although the prediction days come with varying levels of statistical certainty, meaning some give more reliable predictions than others, over the next 10 weeks the researchers identified 10 prediction days with a relatively high certainty, and their predictions on these days turned out to be 70 per cent accurate. And three prediction days had very high certainty - all predictions on these proved correct.

The growing success of the coarse-graining approach suggests it could eventually tame a vast range of unpredictable systems, from consumer markets to the complex biological processes that underlie human diseases. A number of physicians believe these ideas might be applicable to diseases such as cancer, which ultimately come down to the "cheating" behaviour of rogue cells. By understanding how the diverse strategies of different cells can have collective consequences, it might be possible to design low-impact treatments that target just a few crucial cells to steer the system away from disease.