an Article from SCIENTIFIC AMERICAN

DECEMBER, 1986 VOL. 254 NO. 12, 46-57.

Chaos

There is order in chaos: randomness has an underlying geometric form. Chaos imposes fundamental limits on prediction, but it also suggests causal relationships where none were previously suspected

by James P. Crutchfield, J. Doyne Farmer, Norman H. Packard, and Robert S. Shaw

The great power of science lies in the ability to relate cause and effect. On the basis of the laws of gravitation, for example, eclipses can be predicted thousands of years in advance. There are other natural phenomena that are not as predictable. Although the movements of the atmosphere obey the laws of physics just as much as the movements of the planets do, weather forecasts are still stated in terms of probabilities. The weather, the flow of a mountain stream, the roll of the dice all have unpredictable aspects. Since there is no clear relation between cause and effect, such phenomena are said to have random elements. Yet until recently there was little reason to doubt that precise predictability could in principle be achieved. It was assumed that it was only necessary to gather and process a sufficient amount of information.

Such a viewpoint has been altered by a striking discovery: simple deterministic systems with only a few elements can generate random behavior. The randomness is fundamental; gathering more information does not make it go away. Randomness generated in this way has come to be called chaos.

A seeming paradox is that chaos is deterministic, generated by fixed rules that do not themselves involve any elements of chance. In principle the future is completely determined by the past, but in practice small uncertainties are amplified, so that even though the behavior is predictable in the short term, it is unpredictable in the long term. There is order in chaos: underlying chaotic behavior there are elegant geometric forms that create randomness in the same way as a card dealer shuffles a deck of cards or a blender mixes cake batter.

The discovery of chaos has created a new paradigm in scientific modeling. On one hand, it implies new fundamental limits on the ability to make predictions. On the other hand, the determinism inherent in chaos implies that many random phenomena are more predictable than had been thought. Random-looking information gathered in the past—and shelved because it was assumed to be too complicated—can now be explained in terms of simple laws. Chaos allows order to be found in such diverse systems as the atmosphere, dripping faucets, and the heart. The result is a revolution that is affecting many different branches of science.

What are the origins of random behavior? Brownian motion provides a classic example of randomness. A speck of dust observed through a microscope is seen to move in a continuous and erratic jiggle. This is owing to the bombardment of the dust particle by the surrounding water molecules in thermal motion. Because the water molecules are unseen and exist in great number, the detailed motion of the dust particle is thoroughly unpredictable. Here the web of causal influences among the subunits can become so tangled that the resulting pattern of behavior becomes quite random.

The chaos to be discussed here requires no large number of subunits or unseen influences. The existence of random behavior in very simple systems motivates a reexamination of the sources of randomness even in large systems such as weather.

What makes the motion of the atmosphere so much harder to anticipate than the motion of the solar system? Both are made up of many parts, and both are governed by Newton's second law, F = m a, which can be viewed as a simple prescription for predicting the future. If the forces F acting on a given mass m are known, then so is the acceleration a. It then follows from the rules of calculus that if the position and velocity of an object can be measured at a given instant, they are determined forever. This is such a powerful idea that the 18th-century French mathematician Pierre Simon de Laplace once boasted that given the position and velocity of every particle in the universe, he could predict the future for the rest of time. Although there are several obvious practical difficulties to achieving Laplace's goal, for more than 100 years there seemed to be no reason for his not being right, at least in principle. The literal application of Laplace's dictum to human behavior led to the philosophical conclusion that human behavior was completely predetermined: free will did not exist.

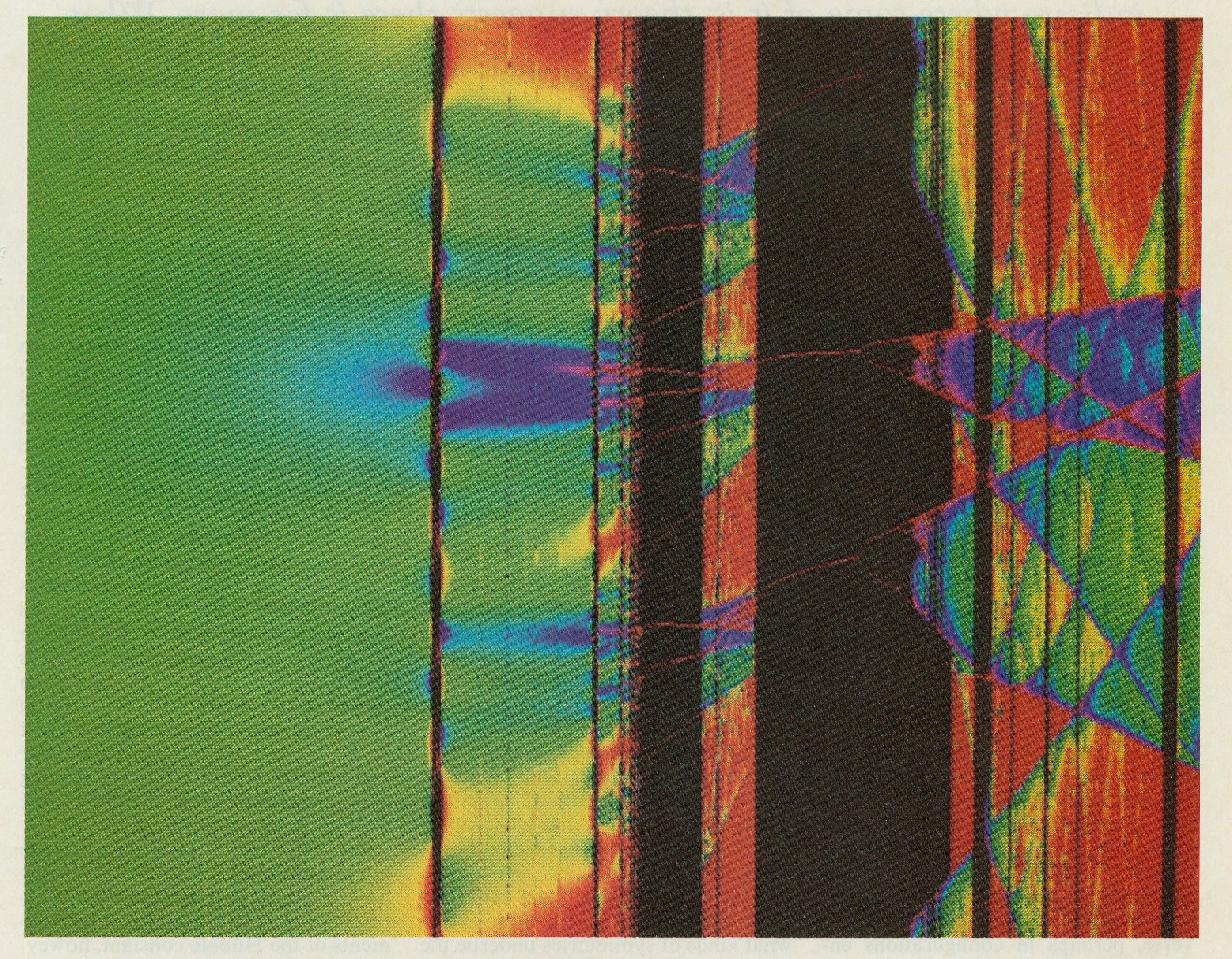

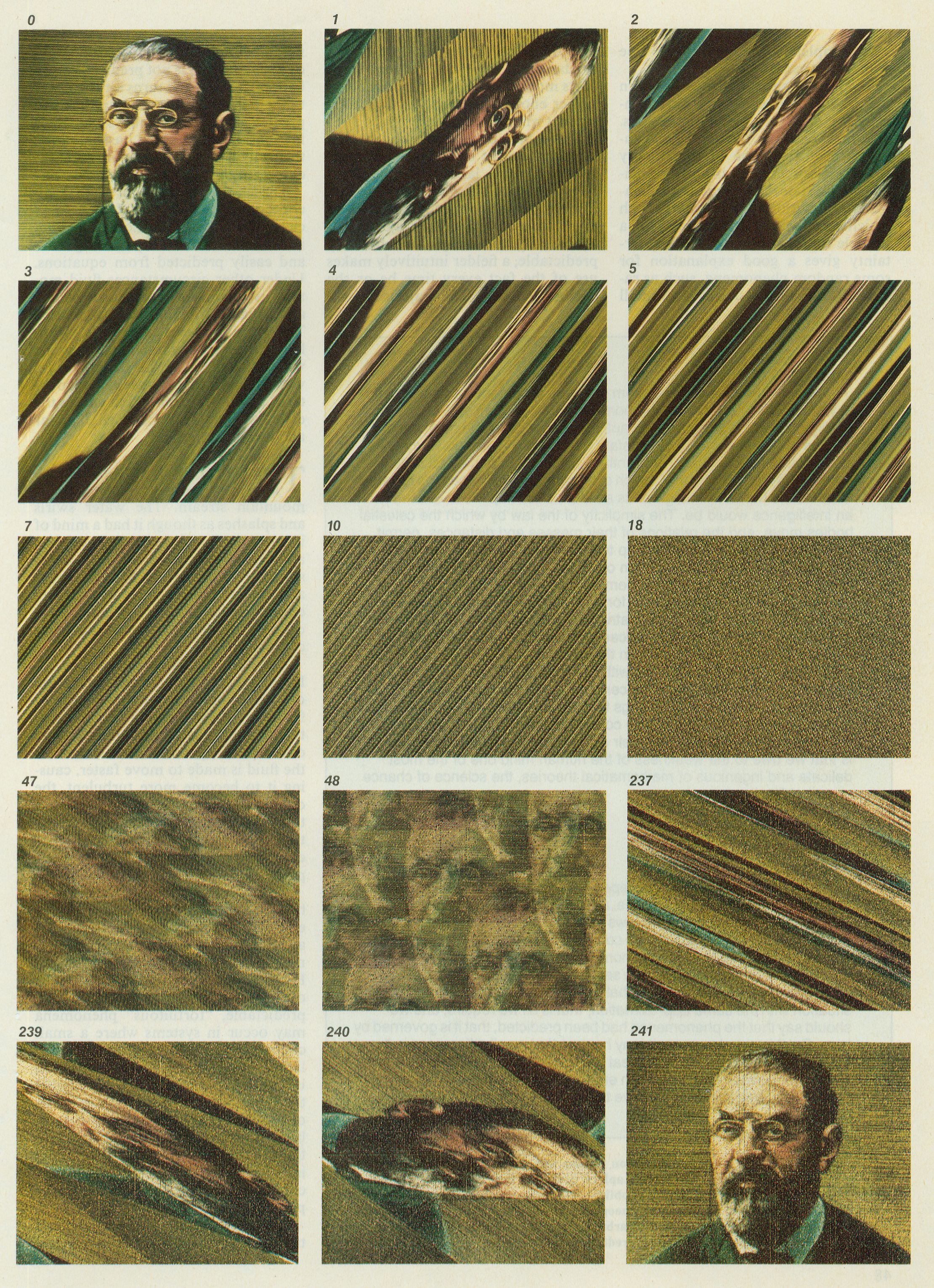

CHAOS results from the geometric operation of stretching. The effect is illustrated for a painting of the French mathematician Henri Poincaré, the originator of dynamical systems theory. The initial image (top left) was digitized so that a computer could perform the stretching operation. A simple mathematical transformation stretches the image diagonally as though it were painted on a sheet of rubber. Where the sheet leaves the box it is cut and reinserted on the other side, as is shown in panel 1. (The number above each panel indicates how many times the transformation has been made.) Applying the transformation repeatedly has the effect of scrambling the face (panels 2-4). The net effect is a random combination of colors, producing a homogeneous field of green (panels 10 and 18). Sometimes it happens that some of the points come back near their initial locations, causing a brief appearance of the original image (panels 47-48, 239-241). The transformation shown here is special in that the phenomenon of “Poincaré recurrence” (as it is called in statistical mechanics) happens much more often than usual; in a typical chaotic transformation recurrence is exceedingly rare, occurring perhaps only once in the lifetime of the universe. In the presence of any amount of background fluctuations the time between recurrences is usually so long that all information about the original image is lost.
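The caption does not say which transformation was used. A standard map with exactly this behavior is Arnold's cat map, (x, y) → (x + y, x + 2y) mod N on an N-by-N grid of pixels: it stretches the square diagonally, then cuts off whatever leaves the box and reinserts it on the other side. The Python sketch below assumes that map; `cat_map` and `cat_map_period` are hypothetical names. A digitized image has only finitely many pixels and the map merely permutes them, so on such a grid an exact recurrence is guaranteed.

```python
def cat_map(x, y, n):
    """One application of Arnold's cat map on an n-by-n pixel grid (assumed)."""
    # x + y and x + 2y stretch the square along a diagonal; "% n" cuts off
    # whatever leaves the box and reinserts it on the opposite side.
    return (x + y) % n, (x + 2 * y) % n


def cat_map_period(n):
    """Number of applications after which every pixel is back where it began."""
    start = [(x, y) for x in range(n) for y in range(n)]
    points = start
    steps = 0
    while True:
        points = [cat_map(x, y, n) for x, y in points]
        steps += 1
        if points == start:
            return steps


for n in (2, 5, 10):
    print(n, cat_map_period(n))  # periods 3, 10 and 30
```

For grids of realistic image size the period can be much longer, but it is always finite.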

Twentieth-century science has seen the downfall of Laplacian determinism, for two very different reasons. The first reason is quantum mechanics. A central dogma of that theory is the Heisenberg uncertainty principle, which states that there is a fundamental limitation to the accuracy with which the position and velocity of a particle can be measured. Such uncertainty gives a good explanation for some random phenomena, such as radioactive decay. A nucleus is so small that the uncertainty principle puts a fundamental limit on the knowledge of its motion, and so it is impossible to gather enough information to predict when it will disintegrate.

The source of unpredictability on a large scale must be sought elsewhere, however. Some large-scale phenomena are predictable and others are not. The distinction has nothing to do with quantum mechanics. The trajectory of a baseball, for example, is inherently predictable; a fielder intuitively makes use of the fact every time he or she catches the ball. The trajectory of a flying balloon with the air rushing out of it, in contrast, is not predictable; the balloon lurches and turns erratically at times and places that are impossible to predict. The balloon obeys Newton's laws just as much as the baseball does; then why is its behavior so much harder to predict than that of the ball?

OUTLOOKS OF TWO LUMINARIES on chance and probability are contrasted. The French mathematician Pierre Simon de Laplace proposed that the laws of nature imply strict determinism and complete predictability, although imperfections in observations make the introduction of probabilistic theory necessary. The quotation from Poincaré foreshadows the contemporary view that arbitrarily small uncertainties in the state of a system may be amplified in time and so predictions of the distant future cannot be made.

Laplace, 1776

“The present state of the system of nature is evidently a consequence of what it was in the preceding moment, and if we conceive of an intelligence which at a given instant comprehends all the relations of the entities of this universe, it could state the respective positions, motions, and general affects of all these entities at any time in the past or future.

“Physical astronomy, the branch of knowledge which does the greatest honor to the human mind, gives us an idea, albeit imperfect, of what such an intelligence would be. The simplicity of the law by which the celestial bodies move, and the relations of their masses and distances, permit analysis to follow their motions up to a certain point; and in order to determine the state of the system of these great bodies in past or future centuries, it suffices for the mathematician that their position and their velocity be given by observation for any moment in time. Man owes that advantage to the power of the instrument he employs, and to the small number of relations that it embraces in its calculations. But ignorance of the different causes involved in the production of events, as well as their complexity, taken together with the imperfection of analysis, prevents our reaching the same certainty about the vast majority of phenomena. Thus there are things that are uncertain for us, things more or less probable, and we seek to compensate for the impossibility of knowing them by determining their different degrees of likelihood. So it is that we owe to the weakness of the human mind one of the most delicate and ingenious of mathematical theories, the science of chance or probability.”

Poincaré, 1903

“A very small cause which escapes our notice determines a considerable effect that we cannot fail to see, and then we say that the effect is due to chance. If we knew exactly the laws of nature and the situation of the universe at the initial moment, we could predict exactly the situation of that same universe at a succeeding moment. But even if it were the case that the natural laws had no longer any secret for us, we could still only know the initial situation approximately. If that enabled us to predict the succeeding situation with the same approximation, that is all we require, and we should say that the phenomenon had been predicted, that it is governed by laws. But it is not always so; it may happen that small differences in the initial conditions produce very great ones in the final phenomena. A small error in the former will produce an enormous error in the latter. Prediction becomes impossible, and we have the fortuitous phenomenon.”

The classic example of such a dichotomy is fluid motion. Under some circumstances the motion of a fluid is laminar—even, steady, and regular—and easily predicted from equations. Under other circumstances fluid motion is turbulent—uneven, unsteady, and irregular—and difficult to predict. The transition from laminar to turbulent behavior is familiar to anyone who has been in an airplane in calm weather and then suddenly encountered a thunderstorm. What causes the essential difference between laminar and turbulent motion?

To understand fully why that is such a riddle, imagine sitting by a mountain stream. The water swirls and splashes as though it had a mind of its own, moving first one way and then another. Nevertheless, the rocks in the stream bed are firmly fixed in place, and the tributaries enter at a nearly constant rate of flow. Where, then, does the random motion of the water come from?

The late Soviet physicist Lev D. Landau is credited with an explanation of random fluid motion that held sway for many years, namely that the motion of a turbulent fluid contains many different, independent oscillations. As the fluid is made to move faster, causing it to become more turbulent, the oscillations enter the motion one at a time. Although each separate oscillation may be simple, the complicated combined motion renders the flow impossible to predict.

Landau's theory has been disproved, however. Random behavior occurs even in very simple systems, without any need for complication or indeterminacy. The French mathematician Henri Poincaré realized this at the turn of the century when he noted that unpredictable, “fortuitous” phenomena may occur in systems where a small change in the present causes a much larger change in the future. The notion is clear if one thinks of a rock poised at the top of a hill. A tiny push one way or another is enough to send it tumbling down widely differing paths. Although the rock is sensitive to small influences only at the top of the hill, chaotic systems are sensitive at every point in their motion.

A simple example serves to illustrate just how sensitive some physical systems can be to external influences. Imagine a game of billiards, somewhat idealized so that the balls move across the table and collide with a negligible loss of energy. With a single shot the billiard player sends the collection of balls into a protracted sequence of collisions. The player naturally wants to know the effects of the shot. For how long could a player with perfect control over his or her stroke predict the cue ball's trajectory? If the player ignored an effect even as minuscule as the gravitational attraction of an electron at the edge of the galaxy, the prediction would become wrong after one minute!

The large growth in uncertainty comes about because the balls are curved, and small differences at the point of impact are amplified with each collision. The amplification is exponential: it is compounded at every collision, like the successive reproduction of bacteria with unlimited space and food. Any effect, no matter how small, quickly reaches macroscopic proportions. That is one of the basic properties of chaos.
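A toy calculation makes the compounding concrete. Purely for illustration, the sketch below assumes that each collision multiplies the current error by a fixed factor (here 2). It then counts the collisions needed for an initial error to reach a macroscopic size of 1. The function name and all numbers are hypothetical and are not taken from the billiard example. Because the growth is exponential, shrinking the initial error about a thousandfold buys only about ten more collisions.

```python
def collisions_until_macroscopic(initial_error, macroscopic_size, gain):
    """Count collisions until an error that is multiplied by `gain` at every
    collision (an idealized assumption) reaches `macroscopic_size`."""
    error = initial_error
    collisions = 0
    while error < macroscopic_size:
        error *= gain  # compounded at every collision, like dividing bacteria
        collisions += 1
    return collisions


print(collisions_until_macroscopic(2 ** -20, 1.0, 2))  # 20
print(collisions_until_macroscopic(2 ** -30, 1.0, 2))  # 30: 1,024 times smaller, 10 more
```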

It is the exponential amplification of errors due to chaotic dynamics that provides the second reason for Laplace's undoing. Quantum mechanics implies that initial measurements are always uncertain, and chaos ensures that the uncertainties will quickly overwhelm the ability to make predictions. Without chaos Laplace might have hoped that errors would remain bounded, or at least grow slowly enough to allow him to make predictions over a long period. With chaos, predictions are rapidly doomed to gross inaccuracy.

The larger framework that chaos emerges from is the so-called theory of dynamical systems. A dynamical system consists of two parts: the notions of a state (the essential information about a system) and a dynamic (a rule that describes how the state evolves with time). The evolution can be visualized in a state space, an abstract construct whose coordinates are the components of the state. In general the coordinates of the state space vary with the context; for a mechanical system they might be position and velocity, but for an ecological model they might be the populations of different species.

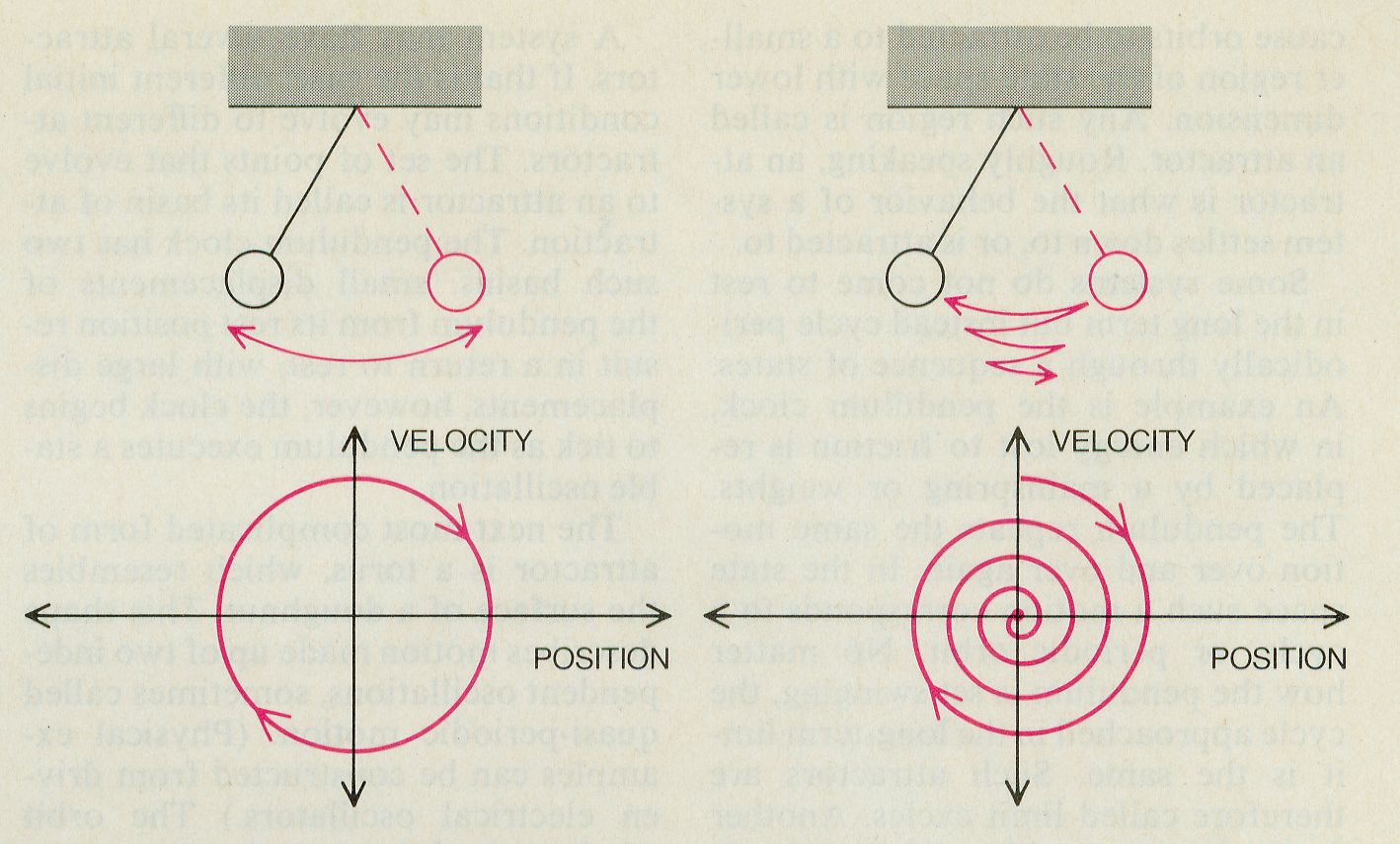

A good example of a dynamical system is found in the simple pendulum. All that is needed to determine its motion are two variables: position and velocity. The state is thus a point in a plane, whose coordinates are position and velocity. Newton's laws provide a rule, expressed mathematically as a differential equation, that describes how the state evolves. As the pendulum swings back and forth the state moves along an “orbit”, or path, in the plane. In the ideal case of a frictionless pendulum the orbit is a loop; failing that, the orbit spirals to a point as the pendulum comes to rest.

STATE SPACE is a useful concept for visualizing the behavior of a dynamical system. It is an abstract space whose coordinates are the degrees of freedom of the system's motion. The motion of a pendulum (top), for example, is completely determined by its initial position and velocity. Its state is thus a point in a plane whose coordinates are position and velocity (bottom). As the pendulum swings back and forth it follows an “orbit”, or path, through the state space. For an ideal, frictionless pendulum the orbit is a closed curve (bottom left); otherwise, with friction, the orbit spirals to a point (bottom right).
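The two orbits in the figure can be reproduced numerically. The Python sketch below rests on stated assumptions. Units are chosen so that g/l = 1, friction is proportional to velocity with a hypothetical coefficient of 0.5, and the equation of motion is stepped with the semi-implicit Euler method. Without friction the energy stays almost constant and the state keeps circling a closed curve. With friction the state spirals in to the fixed point at zero position and zero velocity.

```python
import math


def pendulum_orbit(theta, omega, friction, dt=0.01, steps=20_000):
    """Sequence of (position, velocity) states of a pendulum.

    Assumes g/l = 1 and a friction force proportional to velocity.
    Semi-implicit Euler: update the velocity first, then the position.
    """
    orbit = [(theta, omega)]
    for _ in range(steps):
        omega += (-math.sin(theta) - friction * omega) * dt
        theta += omega * dt
        orbit.append((theta, omega))
    return orbit


def energy(theta, omega):
    """Kinetic plus potential energy per unit mass (g/l = 1)."""
    return 0.5 * omega ** 2 + (1.0 - math.cos(theta))


closed = pendulum_orbit(1.0, 0.0, friction=0.0)  # loops around a closed curve
spiral = pendulum_orbit(1.0, 0.0, friction=0.5)  # spirals in to (0, 0)
print(energy(*closed[0]), energy(*closed[-1]))
print(spiral[-1])
```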

A dynamical system's temporal evolution may happen in either continuous time or in discrete time. The former is called a flow, the latter a mapping. A pendulum moves continuously from one state to another, and so it is described by a continuous-time flow. The number of insects born each year in a specific area and the time interval between drops from a dripping faucet are more naturally described by a discrete-time mapping.
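A discrete-time mapping simply applies a rule once per time step. As a hedged illustration of the insect example, the sketch below assumes the logistic map, x_next = r·x·(1 - x). This is a textbook population model in which x is the population as a fraction of some maximum and r is a growth parameter. The article does not specify this model, and the value r = 2.5 is an arbitrary choice.

```python
def logistic_orbit(r, x0, years):
    """Iterate the logistic map once per year (an assumed population model)."""
    populations = [x0]
    for _ in range(years):
        x = populations[-1]
        populations.append(r * x * (1.0 - x))  # next year's population
    return populations


orbit = logistic_orbit(2.5, 0.1, 100)
print(orbit[:4])
print(orbit[-1])  # settles near the fixed point 1 - 1/r = 0.6
```

Unlike the pendulum, nothing happens between one year and the next: the orbit is a sequence of points rather than a continuous curve.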

To find how a system evolves from a given initial state one can employ the dynamic (equations of motion) to move incrementally along an orbit. This method of deducing the system's behavior requires computational effort proportional to the desired length of time to follow the orbit. For simple systems such as a frictionless pendulum the equations of motion may occasionally have a closed-form solution, which is a formula that expresses any future state in terms of the initial state. A closed-form solution provides a short cut, a simpler algorithm that needs only the initial state and the final time to predict the future without stepping through intermediate states. With such a solution the algorithmic effort required to follow the motion of the system is roughly independent of the time desired. Given the equations of planetary and lunar motion and the earth's and moon's positions and velocities, for instance, eclipses may be predicted years in advance.
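A small Python sketch shows the contrast between stepping along an orbit and using a closed-form solution. It assumes the small-angle approximation to the frictionless pendulum (θ'' = -θ, with g/l = 1), whose closed-form solution is θ(t) = θ₀ cos t + ω₀ sin t. The full nonlinear pendulum also has a closed-form solution, but it requires elliptic functions. Stepping costs one loop iteration per time step, so its effort grows with the time span, while the formula costs the same for any t.

```python
import math


def step_through(theta, omega, t_final, dt=1e-4):
    """Follow the orbit step by step; the work grows in proportion to t_final."""
    for _ in range(round(t_final / dt)):
        omega -= theta * dt  # small-angle pendulum, g/l = 1 (assumed)
        theta += omega * dt
    return theta, omega


def closed_form(theta0, omega0, t):
    """Jump straight to time t; the work is the same for any t."""
    return (theta0 * math.cos(t) + omega0 * math.sin(t),
            -theta0 * math.sin(t) + omega0 * math.cos(t))


print(step_through(0.1, 0.0, 10.0))  # 100,000 small steps
print(closed_form(0.1, 0.0, 10.0))   # one evaluation of a formula
```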

Success in finding closed-form solutions for a variety of simple systems during the early development of physics led to the hope that such solutions exist for any mechanical system. Unfortunately, it is now known that this is not true in general. The unpredictable behavior of chaotic dynamical systems cannot be expressed in a closed-form solution. Consequently there are no possible short cuts to predicting their behavior.

The state space nonetheless provides a powerful tool for describing the behavior of chaotic systems. The usefulness of the state-space picture lies in its ability to represent behavior in geometric form. For example, a pendulum that moves with friction eventually comes to a halt, which in the state space means the orbit approaches a point. The point does not move—it is a fixed point—and since it attracts nearby orbits, it is known as an attractor. If the pendulum is given a small push, it returns to the same fixed-point attractor. Any system that comes to rest with the passage of time can be characterized by a fixed point in state space. This is an example of a very general phenomenon, where losses due to friction or viscosity, for example, cause orbits to be attracted to a smaller region of the state space with lower dimension. Any such region is called an attractor. Roughly speaking, an attractor is what the behavior of a system settles down to, or is attracted to.

Some systems do not come to rest in the long term but instead cycle periodically through a sequence of states. An example is the pendulum clock, in which energy lost to friction is replaced by a mainspring or weights. The pendulum repeats the same motion over and over again. In the state space such a motion corresponds to a cycle, or periodic orbit. No matter how the pendulum is set swinging, the cycle approached in the long-term limit is the same. Such attractors are therefore called limit cycles. Another familiar system with a limit-cycle attractor is the heart.
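Any self-sustained oscillator can demonstrate a limit cycle. The Python sketch below uses the van der Pol oscillator, x'' - mu(1 - x^2)x' + x = 0 with mu = 1, as an assumed stand-in; it is not a model of a pendulum clock. Its nonlinear damping pumps energy into small swings and removes energy from large ones. Whether it starts from a tiny swing or a large one, the motion ends up on the same closed orbit, whose amplitude is close to 2.

```python
def van_der_pol_amplitude(x, v, mu=1.0, dt=0.01, steps=20_000):
    """Integrate x'' - mu (1 - x^2) x' + x = 0 with fourth-order Runge-Kutta
    and return the largest |x| over the last quarter of the run."""
    def deriv(x, v):
        return v, mu * (1.0 - x * x) * v - x

    peak = 0.0
    for i in range(steps):
        k1x, k1v = deriv(x, v)
        k2x, k2v = deriv(x + 0.5 * dt * k1x, v + 0.5 * dt * k1v)
        k3x, k3v = deriv(x + 0.5 * dt * k2x, v + 0.5 * dt * k2v)
        k4x, k4v = deriv(x + dt * k3x, v + dt * k3v)
        x += dt * (k1x + 2 * k2x + 2 * k3x + k4x) / 6
        v += dt * (k1v + 2 * k2v + 2 * k3v + k4v) / 6
        if i >= 3 * steps // 4:  # by now the orbit has reached its attractor
            peak = max(peak, abs(x))
    return peak


small_start = van_der_pol_amplitude(0.1, 0.0)
large_start = van_der_pol_amplitude(4.0, 0.0)
print(small_start, large_start)  # both close to 2: the same limit cycle
```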

A system may have several attractors. If that is the case, different initial conditions may evolve to different attractors. The set of points that evolve to an attractor is called its basin of attraction. The pendulum clock has two such basins: small displacements of the pendulum from its rest position result in a return to rest; with large displacements, however, the clock begins to tick as the pendulum executes a stable oscillation.
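A deliberately simple toy model can mimic the clock's two basins. The model is our assumption and does not describe any real clock. Let r be the amplitude of the swing and suppose it obeys dr/dt = -r(r - 1)(r - 2). Then r = 0 (rest) and r = 2 (steady ticking) both attract nearby amplitudes, and the unstable amplitude r = 1 marks the boundary between their basins. The function names are hypothetical.

```python
def final_amplitude(r, dt=0.01, steps=2_000):
    """Evolve the toy equation dr/dt = -r (r - 1)(r - 2) with Euler steps."""
    for _ in range(steps):
        r += -r * (r - 1.0) * (r - 2.0) * dt
    return r


def attractor_reached(r0):
    """Name the attractor whose basin contains the starting amplitude r0."""
    return "rest" if final_amplitude(r0) < 0.5 else "ticking"


for r0 in (0.3, 0.9, 1.1, 3.0):
    print(r0, attractor_reached(r0))
```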

The next most complicated form of attractor is a torus, which resembles the surface of a doughnut. This shape describes motion made up of two independent oscillations, sometimes called quasi-periodic motion. (Physical examples can be constructed from driven electrical oscillators.) The orbit winds around the torus in state space, one frequency determined by how fast the orbit circles the doughnut in the short direction, the other regulated by how fast the orbit circles the long way around. Attractors may also be higher-dimensional tori, which represent the combination of more than two oscillations.

The important feature of quasi-periodic motion is that in spite of its complexity it is predictable. Even though the orbit may never exactly repeat itself, if the frequencies that make up the motion have no common divisor, the motion remains regular. Orbits that start on the torus near one another remain near one another, and long-term predictability is guaranteed.
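The predictability of quasi-periodic motion is easy to check numerically. The minimal Python sketch below represents motion on a torus by two angles that advance at constant rates 1 and √2. These are assumed frequencies whose ratio is irrational, so the orbit never exactly repeats. Two orbits started 0.001 apart stay 0.001 apart, up to rounding, however long they are followed.

```python
import math

TWO_PI = 2.0 * math.pi


def torus_orbit(phi1, phi2, steps, dt=0.01, w1=1.0, w2=math.sqrt(2.0)):
    """Points (phi1, phi2) on a torus, each angle advancing at its own rate."""
    return [((phi1 + w1 * n * dt) % TWO_PI, (phi2 + w2 * n * dt) % TWO_PI)
            for n in range(steps)]


def angle_gap(a, b):
    """Distance between two angles, measured the short way around."""
    d = abs(a - b) % TWO_PI
    return min(d, TWO_PI - d)


orbit_a = torus_orbit(0.0, 0.0, 100_000)
orbit_b = torus_orbit(0.001, 0.0, 100_000)  # a nearby starting point
gaps = [angle_gap(p[0], q[0]) + angle_gap(p[1], q[1])
        for p, q in zip(orbit_a, orbit_b)]
print(min(gaps), max(gaps))  # both stay at the initial separation of 0.001
```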

ATTRACTORS are geometric forms that characterize long-term behavior in the state space. Roughly speaking, an attractor is what the behavior of a system settles down to, or is attracted to. Here attractors are shown in blue and initial states in red. Trajectories (green) from the initial states eventually approach the attractors. The simplest kind of attractor is a fixed point (top left). Such an attractor corresponds to a pendulum subject to friction; the pendulum always comes to the same rest position, regardless of how it is started swinging (see right half of illustration on preceding page). The next most complicated attractor is a limit cycle (top middle), which forms a closed loop in the state space. A limit cycle describes stable oscillations, such as the motion of a pendulum clock and the beating of a heart. Compound oscillations, or quasi-periodic behavior, correspond to a torus attractor (top right). All three attractors are predictable: their behavior can be forecast as accurately as desired. Chaotic attractors, on the other hand, correspond to unpredictable motions and have a more complicated geometric form. Three examples of chaotic attractors are shown in the bottom row; from left to right they are the work of Edward N. Lorenz, Otto E. Rössler and one of the authors (Shaw), respectively. The images were prepared by using simple systems of differential equations having a three-dimensional state space.

Until fairly recently, fixed points, limit cycles, and tori were the only known attractors. In 1963 Edward N. Lorenz of the Massachusetts Institute of Technology discovered a concrete example of a low-dimensional system that displayed complex behavior. Motivated by the desire to understand the unpredictability of the weather, he began with the equations of motion for fluid flow (the atmosphere can be considered a fluid), and by simplifying them he obtained a system that had just three degrees of freedom. Nevertheless, the system behaved in an apparently random fashion that could not be adequately characterized by any of the three attractors then known. The attractor he observed, which is now known as the Lorenz attractor, was the first example of a chaotic, or strange, attractor.

Employing a digital computer to simulate his simple model, Lorenz elucidated the basic mechanism responsible for the randomness he observed: microscopic perturbations are amplified to affect macroscopic behavior. Two orbits with nearby initial conditions diverge exponentially fast and so stay close together for only a short time. The situation is qualitatively different for nonchaotic attractors. For these, nearby orbits stay close to one another, small errors remain bounded and the behavior is predictable.
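Lorenz's experiment is easy to repeat in miniature. The section does not write out his equations. The sketch below uses the standard form of the Lorenz system, dx/dt = σ(y - x), dy/dt = x(ρ - z) - y, dz/dt = xy - βz, with his classic parameter values σ = 10, ρ = 28, β = 8/3. It integrates them with fourth-order Runge-Kutta. Two orbits whose starting points differ by one part in a billion soon separate by an amount comparable to the size of the attractor. The step size, starting point and run length are illustrative choices.

```python
import math


def lorenz(state, sigma=10.0, rho=28.0, beta=8.0 / 3.0):
    """Right-hand side of the Lorenz equations."""
    x, y, z = state
    return (sigma * (y - x), x * (rho - z) - y, x * y - beta * z)


def rk4_step(state, dt):
    """Advance the state by one fourth-order Runge-Kutta step."""
    def shifted(s, k, h):
        return tuple(si + h * ki for si, ki in zip(s, k))

    k1 = lorenz(state)
    k2 = lorenz(shifted(state, k1, dt / 2))
    k3 = lorenz(shifted(state, k2, dt / 2))
    k4 = lorenz(shifted(state, k3, dt))
    return tuple(s + dt / 6 * (a + 2 * b + 2 * c + d)
                 for s, a, b, c, d in zip(state, k1, k2, k3, k4))


def separation_history(delta=1e-9, dt=0.01, steps=4_000):
    """Distance between two orbits that start `delta` apart, at every step."""
    a = (1.0, 1.0, 1.0)
    b = (1.0 + delta, 1.0, 1.0)
    history = []
    for _ in range(steps):
        a, b = rk4_step(a, dt), rk4_step(b, dt)
        history.append(math.dist(a, b))
    return history


h = separation_history()
print(h[0], max(h))  # starts near 1e-9, grows to the size of the attractor
```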

The key to understanding chaotic behavior lies in understanding a simple stretching and folding operation, which takes place in the state space. Exponential divergence is a local feature: because attractors have finite size, two orbits on a chaotic attractor cannot diverge exponentially forever. Consequently the attractor must fold over onto itself. Although orbits diverge and follow increasingly different paths, they eventually must pass close to one another again. The orbits on a chaotic attractor are shuffled by this process, much as a deck of cards is shuffled by a dealer. The randomness of the chaotic orbits is the result of the shuffling process. The process of stretching and folding happens repeatedly, creating folds within folds ad infinitum. A chaotic attractor is, in other words, a fractal: an object that reveals more detail as it is increasingly magnified [see illustration on page 53].

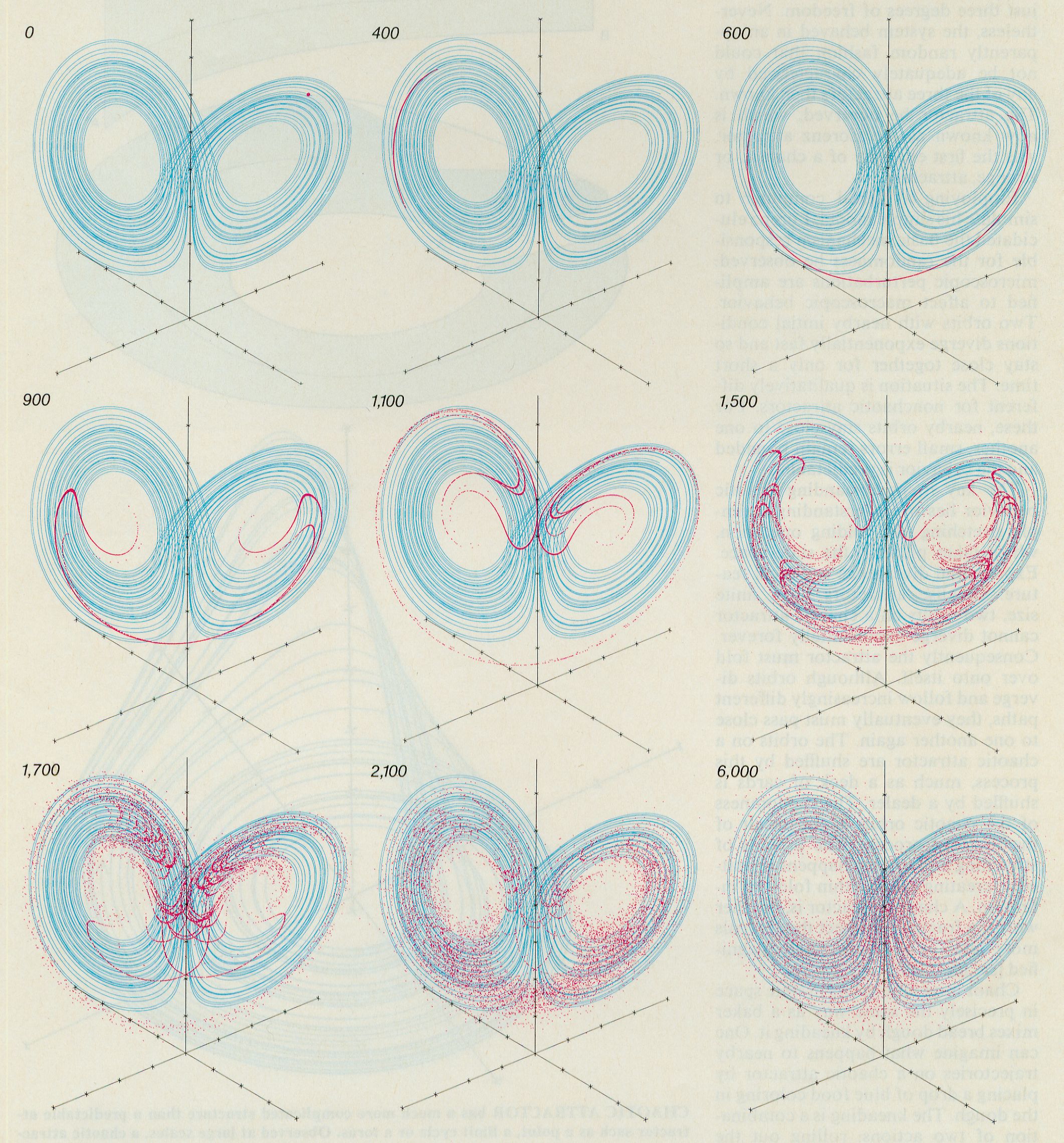

CHAOTIC ATTRACTOR has a much more complicated structure than a predictable attractor such as a point, a limit cycle, or a torus. Observed at large scales, a chaotic attractor is not a smooth surface but one with folds in it. The illustration shows the steps in making a chaotic attractor for the simplest case: the Rössler attractor (bottom). First, nearby trajectories on the object must “stretch”, or diverge, exponentially (top); here the distance between neighboring trajectories roughly doubles. Second, to keep the object compact, it must “fold” back onto itself (middle): the surface bends onto itself so that the two ends meet. The Rössler attractor has been observed in many systems, from fluid flows to chemical reactions, illustrating Einstein's maxim that nature prefers simple forms.

Chaos mixes the orbits in state space in precisely the same way as a baker mixes bread dough by kneading it. One can imagine what happens to nearby trajectories on a chaotic attractor by placing a drop of blue food coloring in the dough. The kneading is a combination of two actions: rolling out the dough, in which the food coloring is spread out, and folding the dough over. At first the blob of food coloring simply gets longer, but eventually it is folded, and after considerable time the blob is stretched and refolded many times. On close inspection the dough consists of many layers of alternating blue and white. After only 20 steps the initial blob has been stretched to more than a million times its original length, and its thickness has shrunk to the molecular level. The blue dye is thoroughly mixed with the dough. Chaos works the same way, except that instead of mixing dough it mixes the state space. Inspired by this picture of mixing, Otto E. Rössler of the University of Tübingen created the simplest example of a chaotic attractor in a flow [see illustration on preceding page].
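The kneading picture has a standard mathematical idealization, the baker's map. We choose it here; the text does not specify it. The map stretches the unit square to twice its width and half its height, cuts it in half, and stacks the right half on top of the left. Each knead doubles lengths, which matches the figure quoted above, since 2^20 = 1,048,576. The Python sketch below follows 1,000 points in a tiny square of dye for 30 kneads. The starting square and random seed are arbitrary.

```python
import math
import random


def bakers_map(x, y):
    """Stretch horizontally by 2 and squash vertically by 2, then cut the
    result in half and stack the right half on top of the left (the fold)."""
    half = math.floor(2.0 * x)  # 0 for the left half, 1 for the right half
    return 2.0 * x - half, (y + half) / 2.0


rng = random.Random(0)
# A tiny drop of dye: 1,000 points inside a square 1e-7 on a side
dye = [(0.3 + 1e-7 * rng.random(), 0.6 + 1e-7 * rng.random())
       for _ in range(1000)]
for _ in range(30):
    dye = [bakers_map(x, y) for x, y in dye]

xs = [x for x, _ in dye]
print(2 ** 20)           # stretch factor after 20 kneads: 1048576
print(min(xs), max(xs))  # the dye now spans nearly the whole width
```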

When observations are made on a physical system, it is impossible to specify the state of the system exactly owing to the inevitable errors in measurement. Instead, the state of the system is located not at a single point but within a small region of state space. Although quantum uncertainty sets the ultimate size of the region, in practice different kinds of noise limit measurement precision by introducing substantially larger errors. The small region specified by a measurement is analogous to the blob of blue dye in the dough.

DIVERGENCE of nearby trajectories is the underlying reason chaos leads to unpredictability. A perfect measurement would correspond to a point in the state space, but any real measurement is inaccurate, generating a cloud of uncertainty. The true state might be anywhere inside the cloud. As shown here for the Lorenz attractor, the uncertainty of the initial measurement is represented by 10,000 red dots, initially so close together that they are indistinguishable. As each point moves under the action of the equations, the cloud is stretched into a long, thin thread, which then folds over onto itself many times, until the points are spread over the entire attractor. Prediction has now become impossible: the final state can be anywhere on the attractor. For a predictable attractor, in contrast, all the final states remain close together. The numbers above the illustrations are in units of 1/200 second.

Locating the system in a small region of state space by carrying out a measurement yields a certain amount of information about the system. The more accurate the measurement is, the more knowledge an observer gains about the system's state. Conversely, the larger the region, the more uncertain the observer. Since nearby points in nonchaotic systems stay close as they evolve in time, a measurement provides a certain amount of information that is preserved with time. This is exactly the sense in which such systems are predictable: initial measurements contain information that can be used to predict future behavior. In other words, predictable dynamical systems are not particularly sensitive to measurement errors.

The stretching and folding operation of a chaotic attractor systematically removes the initial information and replaces it with new information: the stretch makes small-scale uncertainties larger, the fold brings widely separated trajectories together and erases large-scale information. Thus chaotic attractors act as a kind of pump bringing microscopic fluctuations up to a macroscopic expression.
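The simplest stretch-and-fold rule, the doubling map x → 2x mod 1, lets us count the loss of initial information in bits. We use it here as an assumed stand-in for a chaotic attractor. At each step, record only which half of the interval the state is in. A measurement accurate to k binary digits then predicts these coarse observations for only about k steps, because each stretch pushes one more digit of the initial uncertainty up to the large scale. The sketch uses exact fractions so that rounding cannot interfere. The initial state 1/3 is arbitrary.

```python
from fractions import Fraction

HALF = Fraction(1, 2)


def halves_visited(x, steps):
    """Which half (0 = left, 1 = right) the orbit occupies at each step."""
    record = []
    for _ in range(steps):
        record.append(0 if x < HALF else 1)
        x = (2 * x) % 1  # stretch by 2, then fold back into [0, 1)
    return record


def steps_predicted(x_true, x_measured, steps=40):
    """Steps for which the measured state still predicts the true one."""
    pairs = zip(halves_visited(x_true, steps), halves_visited(x_measured, steps))
    for n, (a, b) in enumerate(pairs):
        if a != b:
            return n
    return steps


true_state = Fraction(1, 3)
for bits in (10, 20, 30):
    measured = true_state + Fraction(1, 2 ** bits)  # error of 2**-bits
    print(bits, steps_predicted(true_state, measured))  # 8, 18, 28
```

Each extra bit of measurement precision buys one more step of prediction, and no more.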

In this light it is clear that no exact solution, no short cut to tell the future, can exist. After a brief time interval the uncertainty specified by the initial measurement covers the entire attractor and all predictive power is lost: there is simply no causal connection between past and future.

Chaotic attractors function locally as noise amplifiers. A small fluctuation due perhaps to thermal noise will cause a large deflection in the orbit position soon afterward. But there is an important sense in which chaotic attractors differ from simple noise amplifiers. Because the stretching and folding operation is assumed to be repetitive and continuous, any tiny fluctuation will eventually dominate the motion, and the qualitative behavior is independent of noise level. Hence chaotic systems cannot directly be “quieted” by lowering the temperature, for example.
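A toy experiment can check that the qualitative behavior does not depend on the noise level. The Python sketch below takes the doubling map x → 2x mod 1 as the chaotic system and adds a small random kick at every step. The kick sizes, seed and run length are arbitrary. The average position summarizes the orbit's long-run statistics, and it comes out the same whether the kicks are of size 10^-3 or a millionfold smaller.

```python
import random


def noisy_doubling_mean(noise, steps=100_000, seed=1):
    """Long-run average of x under x -> (2x + kick) mod 1, where each kick is
    drawn uniformly from [-noise, noise] (a hypothetical noise model)."""
    rng = random.Random(seed)
    x = 0.2
    total = 0.0
    for _ in range(steps):
        x = (2.0 * x + rng.uniform(-noise, noise)) % 1.0
        total += x
    return total / steps


loud = noisy_doubling_mean(1e-3)
quiet = noisy_doubling_mean(1e-9)
print(loud, quiet)  # both close to 0.5, the average over the whole interval
```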

Chaotic systems generate randomness on their own without the need for any external random inputs. Random behavior comes from more than just the amplification of errors and the loss of the ability to predict; it is due to the complex orbits generated by stretching and folding.

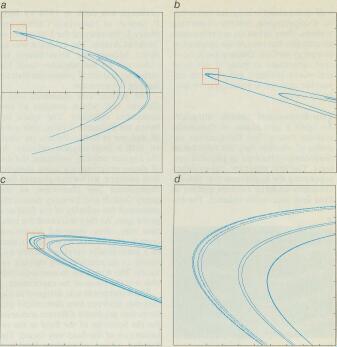

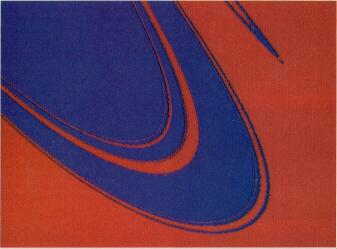

CHAOTIC ATTRACTORS are fractals: objects that reveal more detail as they are increasingly magnified. Chaos naturally produces fractals. As nearby trajectories expand they must eventually fold over close to one another for the motion to remain finite. This is repeated again and again, generating folds within folds, ad infinitum. As a result chaotic attractors have a beautiful microscopic structure. Michel Hénon of the Nice Observatory in France discovered a simple rule that stretches and folds the plane, moving each point to a new location. Starting from a single initial point, each successive point obtained by repeatedly applying Hénon's rule is plotted. The resulting geometric form (a) provides a simple example of a chaotic attractor. The small box is magnified by a factor of 10 in b. By repeating the process (c, d) the microscopic structure of the attractor is revealed in detail. The bottom illustration depicts another part of the Hénon attractor.
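The caption does not spell out Hénon's rule. In its standard form it moves the point (x, y) to (1 - a x^2 + y, b x). With Hénon's classic values a = 1.4 and b = 0.3, the repeated points should trace out an attractor resembling panel a. The Python sketch below generates those points from the single starting point (0, 0). It discards the first 100 points as a transient, an arbitrary choice. Plotting the result with any plotting tool shows the folded, layered form.

```python
def henon_points(n, a=1.4, b=0.3, x=0.0, y=0.0, transient=100):
    """Successive points from repeatedly applying Hénon's rule
    (x, y) -> (1 - a x^2 + y, b x), skipping an initial transient."""
    points = []
    for i in range(transient + n):
        x, y = 1.0 - a * x * x + y, b * x  # stretch and fold the plane
        if i >= transient:
            points.append((x, y))
    return points


pts = henon_points(10_000)
xs = [p[0] for p in pts]
ys = [p[1] for p in pts]
print(min(xs), max(xs), min(ys), max(ys))  # a bounded, finely layered set
```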

It should be noted that chaotic as well as nonchaotic behavior can occur in dissipationless, energy-conserving systems. Here orbits do not relax onto an attractor but instead are confined to an energy surface. Dissipation is, however, important in many if not most real-world systems, and one can expect the concept of attractor to be generally useful.
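In state space, dissipation shows up as shrinking areas (or volumes), and that shrinkage is what squeezes orbits onto an attractor. Hénon's rule makes this explicit. In the standard form assumed here, (x, y) → (1 - a x^2 + y, b x), its Jacobian determinant is -b, so every small area is multiplied by b at each step. The Python sketch below measures that factor on a tiny triangle. With b = 0.3 areas shrink. With b = 1 they are preserved, which is loosely the analogue of a dissipationless system confined to its energy surface. The test point and triangle size are arbitrary.

```python
def henon(x, y, a=1.4, b=0.3):
    """One application of Hénon's rule (standard form, assumed)."""
    return 1.0 - a * x * x + y, b * x


def area_factor(b, x=0.5, y=0.1, h=1e-6):
    """Ratio of a tiny triangle's area after one step to its area before."""
    p0 = henon(x, y, b=b)
    p1 = henon(x + h, y, b=b)
    p2 = henon(x, y + h, b=b)
    # Shoelace formula for the image triangle; the original has area h*h/2.
    image_area = abs((p1[0] - p0[0]) * (p2[1] - p0[1])
                     - (p2[0] - p0[0]) * (p1[1] - p0[1])) / 2.0
    return image_area / (h * h / 2.0)


print(area_factor(0.3))  # about 0.3: areas shrink (dissipative)
print(area_factor(1.0))  # about 1.0: areas preserved
```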

Low-dimensional chaotic attractors open a new realm of dynamical systems theory, but the question remains of whether they are relevant to randomness observed in physical systems.

The first experimental evidence

supporting the hypothesis that chaotic

attractors underlie random motion in

fluid flow was rather indirect. The experiment

was done in 1974 by Jerry

P. Gollub of Haverford College and

Harry L. Swinney of the University of

Texas at Austin. The evidence was indirect

because the investigators focused not on

the attractor itself but rather on statistical

properties characterizing the attractor.

The system they examined was a

Couette cell, which consists of two

concentric cylinders. The space between the

cylinders is filled with a fluid, and one or

both cylinders are rotated with a fixed angular

velocity. As the angular velocity increases,

the fluid shows progressively more complex

flow patterns, with a complicated time

dependence [see illustration on

this page]. Gollub and Swinney essentially

measured the velocity of the fluid at a

given spot. As they increased the rotation rate,

they observed transitions from a velocity that

is constant in time to a periodically varying

velocity and finally to an aperiodically varying

velocity. The transition to aperiodic motion was

the focus of the experiment.

The experiment was designed to distinguish between two

theoretical pictures that predicted different scenarios

for the behavior of the fluid as the rotation rate

of the fluid was varied. The Landau picture of

random fluid motion predicted that an ever higher

number of independent fluid oscillations should

be excited as the rotation rate is increased.

The associated attractor would be a high-dimensional

torus. The Landau picture had been challenged by

David Ruelle of the Institut des Hautes Études

Scientifiques near Paris and Floris Takens of the

University of Groningen in the Netherlands.

They gave mathematical arguments suggesting

that the attractor associated with the Landau

picture would not be likely to occur in fluid

motion. Instead their results suggested

that any possible high-dimensional

tori might give way to a chaotic attractor,

as originally postulated by Lorenz.

Gollub and Swinney found that for

low rates of rotation the flow of the

fluid did not change in time: the underlying

attractor was a fixed point. As the rotation

was increased the water began to oscillate with

one independent frequency, corresponding to a

limit-cycle attractor (a periodic orbit),

and as the rotation was increased still

further the oscillation took on two independent

frequencies, corresponding

to a two-dimensional torus attractor.

Landau's theory predicted that as the

rotation rate was further increased the

pattern would continue: more distinct

frequencies would gradually appear.

Instead, at a critical rotation rate a

continuous range of frequencies suddenly

appeared. Such an observation was consistent

with Lorenz' “deterministic nonperiodic flow,”

lending credence to his idea that chaotic attractors

underlie fluid turbulence.
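The contrast between a few sharp frequencies and a continuous range of them can be sketched numerically. This is an illustrative stand-in, not Gollub and Swinney's analysis. The first signal is a two-frequency (torus-like) signal with incommensurate frequencies 0.1 and 0.1 times the golden ratio, in cycles per sample. The second is a chaotic signal taken from the logistic map. Each signal is passed through a Hann window and a direct discrete Fourier transform, and the sketch then compares the share of power carried by the ten strongest frequency bins. The frequencies, window, and bin count are all assumptions chosen for illustration.

```python
import cmath
import math


def power_spectrum(signal):
    """One-sided periodogram of a real signal via a direct DFT (O(N^2), fine for N ~ 512)."""
    n = len(signal)
    mean = sum(signal) / n
    # A Hann window keeps sharp spectral lines from leaking into distant bins.
    windowed = [(s - mean) * 0.5 * (1 - math.cos(2 * math.pi * i / (n - 1)))
                for i, s in enumerate(signal)]
    spectrum = []
    for k in range(n // 2 + 1):
        acc = sum(w * cmath.exp(-2j * math.pi * k * i / n)
                  for i, w in enumerate(windowed))
        spectrum.append(abs(acc) ** 2)
    return spectrum


def top_bin_fraction(spectrum, n_bins=10):
    """Fraction of total power carried by the n_bins strongest frequencies."""
    ranked = sorted(spectrum, reverse=True)
    return sum(ranked[:n_bins]) / sum(spectrum)


N = 512
golden = (1 + math.sqrt(5)) / 2
# Torus-like motion: two independent, incommensurate frequencies.
quasi = [math.sin(2 * math.pi * 0.1 * i) + 0.5 * math.sin(2 * math.pi * 0.1 * golden * i)
         for i in range(N)]
# Chaotic motion: logistic-map iterates, an assumed stand-in for a measured velocity.
chaotic = []
x = 0.3
for _ in range(N):
    x = 4.0 * x * (1.0 - x)
    chaotic.append(x)

print(top_bin_fraction(power_spectrum(quasi)))    # nearly all power in a few sharp peaks
print(top_bin_fraction(power_spectrum(chaotic)))  # power spread over a broad band
```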

EXPERIMENTAL EVIDENCE supports

the hypothesis that chaotic attractors underlie some kinds of random

motion in fluid flow. Shown here are successive pictures

of water in a Couette cell, which consists

of two nested cylinders. The space between

the cylinders is filled with water and the

inner cylinder is rotated with a certain angular

velocity (a). As the angular velocity

is increased, the fluid shows a progressively

more complex flow pattern (b), which

becomes irregular (c) and then chaotic

(d).

Although the analysis of Gollub and

Swinney bolstered the notion that

chaotic attractors might underlie some

random motion in fluid flow, their

work was by no means conclusive.

One would like to explicitly demonstrate

the existence in experimental

data of a simple chaotic attractor.

Typically, however, an experiment

does not record all facets of a system

but only a few. Gollub and Swinney

could not record, for example, the entire

Couette flow but only the fluid velocity

at a single point. The task of the

investigator is to “reconstruct”

the attractor from the limited data. Clearly

that cannot always be done; if the attractor

is too complicated, something

will be lost. In some cases, however, it

is possible to reconstruct the dynamics

on the basis of limited data.

A technique introduced by us and

put on a firm mathematical foundation by

Takens made it possible to reconstruct a

state space and look for

chaotic attractors. The basic idea is

that the evolution of any single component

of a system is determined by

the other components with which it

interacts. Information about the relevant

components is thus implicitly

contained in the history of any single

component. To reconstruct an “equivalent”

state space, one simply looks at

a single component and treats the

measured values at fixed time delays

(one second ago, two seconds ago, and

so on, for example) as though they

were new dimensions.

The delayed values can be viewed as

new coordinates, defining a single

point in a multidimensional state

space. Repeating the procedure and

taking delays relative to different

times generates many such points. One

can then use other techniques to test

whether or not these points lie on a

chaotic attractor. Although this representation

is in many respects arbitrary,

it turns out that the important properties of

an attractor are preserved by it

and do not depend on the details of

how the reconstruction is done.
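The delay-coordinate construction just described fits in a few lines. This is a minimal sketch, assuming the measurements arrive as a list of equally spaced samples. The parameter names `dimension` and `lag` (the lag counted in samples) are illustrative, and in practice both have to be chosen with care. The sketch stacks later values rather than earlier ones, which is an equivalent and arbitrary choice.

```python
from typing import Sequence


def delay_embed(series: Sequence[float], dimension: int, lag: int = 1) -> list[tuple[float, ...]]:
    """Turn a scalar time series into points in a reconstructed state space.

    Each point is (s[i], s[i + lag], ..., s[i + (dimension - 1) * lag]):
    delayed copies of one measured component serve as the extra coordinates.
    """
    if dimension < 1 or lag < 1:
        raise ValueError("dimension and lag must be positive")
    span = (dimension - 1) * lag
    return [tuple(series[i + j * lag] for j in range(dimension))
            for i in range(len(series) - span)]


# Usage: three-dimensional points from a single measured variable.
print(delay_embed([1.0, 2.0, 3.0, 4.0, 5.0], dimension=3))
# [(1.0, 2.0, 3.0), (2.0, 3.0, 4.0), (3.0, 4.0, 5.0)]
```

A series too short to fill even one point returns an empty list rather than raising an error.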

The example we shall use to illustrate the technique

has the advantage of being familiar and accessible

to nearly everyone. Most people are

aware of the periodic pattern of drops

emerging from a dripping faucet. The

time between successive drops can be

quite regular, and more than one insomniac

has been kept awake waiting

for the next drop to fall. Less familiar

is the behavior of a faucet at a somewhat

higher flow rate. One can often

find a regime where the drops, while

still falling separately, fall in a never-repeating

pattern, like an infinitely inventive

drummer. (This is an experiment easily carried

out personally; the faucets without the little screens

work best.) The changes between periodic and

random-seeming patterns are reminiscent of

the transition between laminar and turbulent

fluid flow. Could a simple chaotic attractor

underlie this randomness?

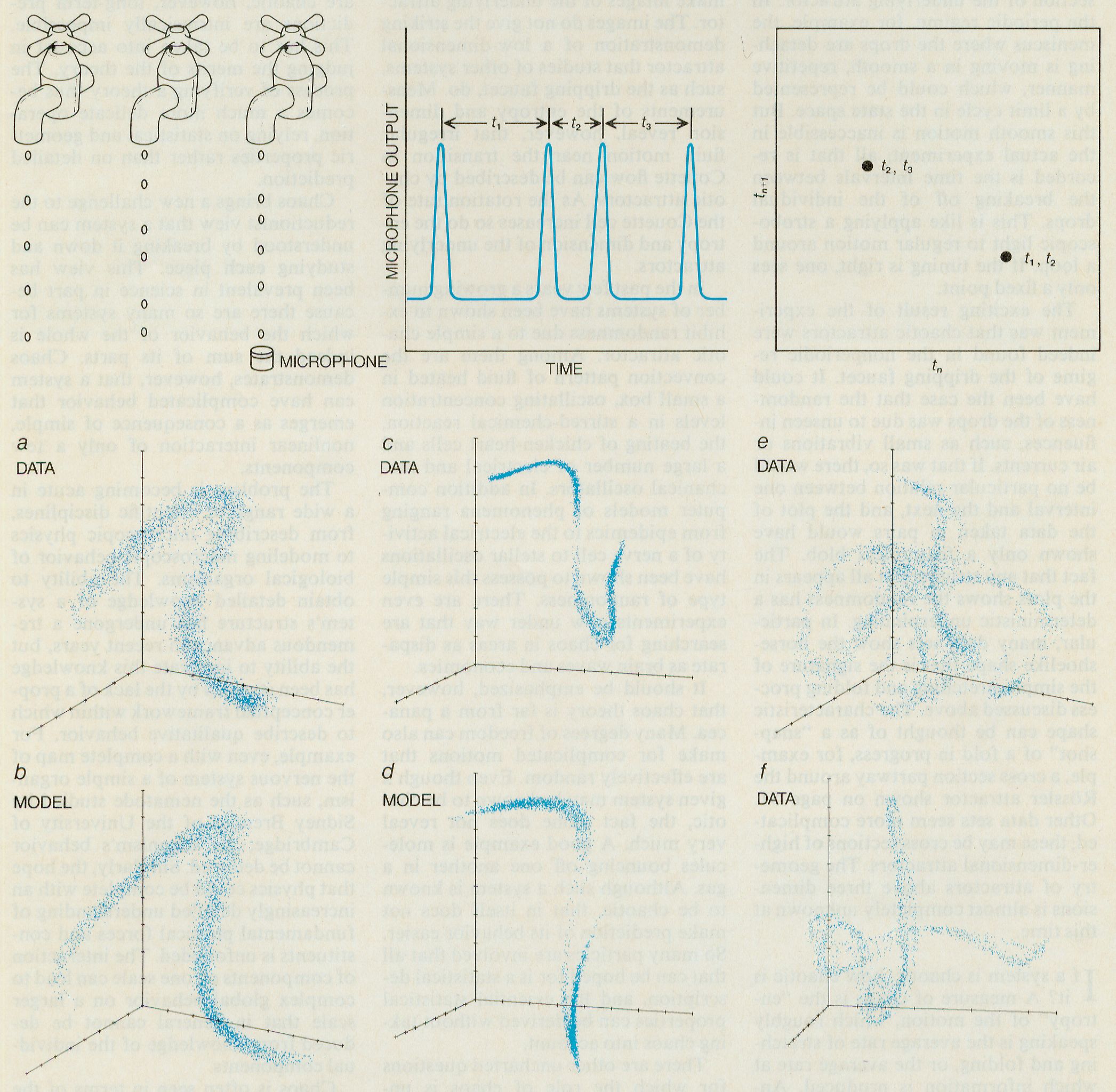

DRIPPING FAUCET is an example of a common system that can

undergo a chaotic transition. The underlying attractor is

reconstructed by plotting the time intervals between successive

drops in pairs, as is shown at the top of the illustration.

Attractors reconstructed from an actual dripping faucet

(a, c) compare favorably with attractors

generated by following variants of Hénon's rule (b, d).

(The entire Hénon attractor is shown on page 53.) Illustrations

e and f were reconstructed from high rates of water

flow and presumably represent the cross sections of hitherto

unseen chaotic attractors. Time delay coordinates were employed

in each of the plots. The horizontal coordinate is $t_n$,

the time interval between drop $n$ and drop $n-1$. The

vertical coordinate is the next time interval, $t_{n+1}$,

and the third coordinate, visualized as coming out of the page,

is $t_{n+2}$. Each point is thus determined by a triplet

of numbers $(t_n, t_{n+1}, t_{n+2})$

that have been plotted for a set of 4,094 data samples.

Simulated noise was added to illustrations b and

d.

The experimental study of the dripping faucet was done

at the University of California at Santa Cruz by one of

us (Shaw) in collaboration with Peter

L. Scott, Stephen C. Pope, and Philip J.

Martien. The first form of the experiment consisted

in allowing the drops

from an ordinary faucet to fall on a

microphone and measuring the time

intervals between the resulting sound

pulses. Typical results from a somewhat more

refined experiment are

shown on the preceding page. By plotting the time

intervals between drops

in pairs, one effectively takes a cross

section of the underlying attractor. In

the periodic regime, for example, the

meniscus where the drops are detaching is moving in

a smooth, repetitive

manner, which could be represented

by a limit cycle in the state space. But

this smooth motion is inaccessible in

the actual experiment; all that is recorded is

the time intervals between

the breaking off of the individual

drops. This is like applying a stroboscopic light

to regular motion around

a loop. If the timing is right, one sees

only a fixed point.
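The stroboscope analogy can be made concrete with a toy limit cycle: a point moving uniformly around a unit circle with period T. This is an assumed stand-in for the meniscus motion, and the function and parameter names are hypothetical. Flashing once per period freezes the motion at a single point. Flashing at a slightly different interval makes the frozen points creep around the loop.

```python
import math


def strobe(period: float, flash_interval: float, n_flashes: int, phase: float = 0.0):
    """Sample a point circling a unit loop (the given period) at regular flashes."""
    points = []
    for n in range(n_flashes):
        angle = phase + 2 * math.pi * (n * flash_interval) / period
        points.append((math.cos(angle), math.sin(angle)))
    return points


in_step = strobe(period=1.0, flash_interval=1.0, n_flashes=5)
off_step = strobe(period=1.0, flash_interval=1.05, n_flashes=5)
print(in_step)   # five copies of (1.0, 0.0), up to rounding: a fixed point
print(off_step)  # points advancing by 0.05 of a turn per flash
```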

The exciting result of the experiment was that chaotic attractors were

indeed found in the nonperiodic regime of the dripping faucet. It could

have been the case that the randomness of the

drops was due to unseen influences, such as small vibrations or

air currents. If that were so, there would

be no particular relation between one

interval and the next, and the plot of

the data taken in pairs would have

shown only a featureless blob. The

fact that any structure at all appears in

the plots shows the randomness has a deterministic underpinning.
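This argument can be turned into a simple numerical test, sketched here under stated assumptions. If successive intervals are related by a deterministic rule, the previous interval predicts the next one well. If the same values are shuffled into random order, the previous interval predicts nothing. The "interval" series below is generated by the logistic map purely as a hypothetical stand-in for faucet data. The predictor, a nearest neighbor in the first half of the data, is one simple choice among many.

```python
import random


def nearest_neighbor_error(series):
    """Mean error predicting s[n+1] from s[n] using the closest earlier pair.

    The first half of the consecutive pairs forms a 'library'; each pair in the
    second half is predicted from the library pair whose first value is closest.
    """
    pairs = list(zip(series[:-1], series[1:]))
    half = len(pairs) // 2
    library, tests = pairs[:half], pairs[half:]
    total = 0.0
    for current, actual_next in tests:
        _, predicted_next = min(library, key=lambda p: abs(p[0] - current))
        total += abs(predicted_next - actual_next)
    return total / len(tests)


# Hypothetical interval data produced by a deterministic rule (logistic map).
intervals = []
x = 0.3
for _ in range(1000):
    x = 4.0 * x * (1.0 - x)
    intervals.append(x)

shuffled = intervals[:]
random.Random(0).shuffle(shuffled)   # same values, no relation between neighbors

print(nearest_neighbor_error(intervals))  # small: the pair plot has structure
print(nearest_neighbor_error(shuffled))   # large: a featureless blob
```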

In particular, many data sets show the horseshoe-like shape

that is the signature of the simple stretching and folding

process discussed above. The characteristic shape can be thought

of as a “snapshot” of a fold in progress, for example,

a cross section partway around the Rössler attractor shown on page 51.

Other data sets seem more complicated; these may be cross sections

of higher-dimensional attractors. The geometry of attractors above

three dimensions is almost completely unknown at this time.

If a system is chaotic, how chaotic is it? A measure of chaos is

the “entropy” of the motion, which roughly

speaking is the average rate of stretching and folding,

or the average rate at which information is produced. Another

statistic is the “dimension” of the

attractor. If a system is simple, its behavior should be

described by a low-dimensional attractor in the state

space, such as the examples given in

this article. Several numbers may be

required to specify the state of a more

complicated system, and its corresponding attractor would therefore be

higher-dimensional.
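For a one-dimensional map, the average rate of stretching can be estimated by averaging the logarithm of the local stretching factor $|f'(x)|$ along an orbit. This Lyapunov exponent is a common proxy for the entropy described above; for smooth one-dimensional maps with a well-behaved invariant measure the two coincide. The sketch assumes the logistic map $f(x) = r x (1 - x)$, which is not discussed in this article. Its exponent at $r = 4$ is known exactly: $\ln 2$ per step, or one bit of information produced per iteration.

```python
import math


def lyapunov_logistic(r: float, x0: float = 0.3, n: int = 100_000,
                      transient: int = 1_000) -> float:
    """Average of ln|f'(x)| along an orbit of f(x) = r x (1 - x), in nats per step."""
    x = x0
    for _ in range(transient):
        x = r * x * (1.0 - x)
    total = 0.0
    for _ in range(n):
        total += math.log(abs(r * (1.0 - 2.0 * x)))   # local stretching factor |f'(x)|
        x = r * x * (1.0 - x)
    return total / n


lam = lyapunov_logistic(4.0)
print(lam, lam / math.log(2))   # close to ln 2 nats, i.e. about 1 bit per step
print(lyapunov_logistic(3.2))   # negative: a stable cycle, no information produced
```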

The technique of reconstruction,

combined with measurements of entropy and dimension,

makes it possible to reexamine the fluid flow originally

studied by Gollub and Swinney. This

was done by members of Swinney's

group in collaboration with two of

us (Crutchfield and Farmer). The reconstruction

technique enabled us to

make images of the underlying attractor.

The images do not give the striking

demonstration of a low-dimensional

attractor that studies of other systems,

such as the dripping faucet, do. Measurements

of the entropy and dimension reveal, however, that irregular

fluid motion near the transition in Couette flow

can be described by chaotic attractors. As the rotation rate of

the Couette cell increases so do the entropy and

dimension of the underlying attractors.
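Box counting is one common way to put a number on the dimension of an attractor. The method covers the points with a grid of boxes of side $\varepsilon$ and records how the number of occupied boxes $N(\varepsilon)$ grows as $\varepsilon$ shrinks, $N(\varepsilon) \propto \varepsilon^{-D}$. The sketch below applies it to points from Hénon's rule with the usual parameters $a = 1.4$, $b = 0.3$, which are assumptions. It does not reproduce the methods used for the Couette data. The box sizes and point count are illustrative choices. The crude least-squares fit should land between 1 and 2, the signature of a fractal that is more than a curve but less than an area.

```python
import math


def henon_points(n: int, a: float = 1.4, b: float = 0.3, transient: int = 1000):
    """Points on the Henon attractor (standard parameters assumed)."""
    x, y = 0.0, 0.0
    pts = []
    for i in range(n + transient):
        x, y = 1.0 - a * x * x + y, b * x
        if i >= transient:
            pts.append((x, y))
    return pts


def box_count(points, eps: float) -> int:
    """Number of grid boxes of side eps that contain at least one point."""
    return len({(math.floor(x / eps), math.floor(y / eps)) for x, y in points})


def box_dimension(points, sizes) -> float:
    """Least-squares slope of log N(eps) against log(1/eps)."""
    xs = [math.log(1.0 / e) for e in sizes]
    ys = [math.log(box_count(points, e)) for e in sizes]
    mx, my = sum(xs) / len(xs), sum(ys) / len(ys)
    num = sum((u - mx) * (v - my) for u, v in zip(xs, ys))
    den = sum((u - mx) ** 2 for u in xs)
    return num / den


pts = henon_points(100_000)
print(box_dimension(pts, [0.04, 0.02, 0.01, 0.005]))  # a value between 1 and 2
```

Finite data limit the estimate. Too few points leave sparsely visited boxes empty at small $\varepsilon$, which biases $D$ downward. This is one reason dimension measurements on experimental data require care.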

In the past few years a growing number of systems

have been shown to exhibit randomness due to a

simple chaotic attractor. Among them are the

convection pattern of fluid heated in

a small box, oscillating concentration

levels in a stirred chemical reaction,

the beating of chicken-heart cells, and

a large number of electrical and mechanical

oscillators. In addition computer models of phenomena ranging

from epidemics to the electrical activity of a nerve

cell to stellar oscillations have been shown to

possess this simple type of randomness. There are even

experiments now under way that are searching for

chaos in areas as disparate as brain waves and economics.

It should be emphasized, however,

that chaos theory is far from a panacea.

Many degrees of freedom can also

make for complicated motions that

are effectively random. Even though a

given system may be known to be chaotic,

the fact alone does not reveal very much.

A good example is molecules bouncing off one another in a

gas. Although such a system is known

to be chaotic, that in itself does not

make prediction of its behavior easier.

So many particles are involved that all

that can be hoped for is a statistical

description, and the essential statistical

properties can be derived without taking

chaos into account.

There are other uncharted questions

for which the role of chaos is unknown.

What of constantly changing

patterns that are spatially extended,

such as the dunes of the Sahara and

fully developed turbulence? It is not

clear whether complex spatial patterns

can be usefully described by a single

attractor in a single state space. Perhaps,

though, experience with the simplest

attractors can serve as a guide to

a more advanced picture, which may

involve entire assemblages of spatially

mobile deterministic forms akin to

chaotic attractors.

The existence of chaos affects the

scientific method itself. The classic

approach to verifying a theory is to

make predictions and test them against

experimental data. If the phenomena

are chaotic, however, long-term

predictions are intrinsically impossible.

This has to be taken into account in

judging the merits of the theory. The

process of verifying a theory thus

becomes a much more delicate operation,

relying on statistical and geometric

properties rather than on detailed prediction.
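A short calculation shows why long-term prediction is out of reach rather than merely difficult. It rests on a simplifying assumption: that small errors grow at a steady exponential rate $\lambda$, comparable to the average rate of stretching mentioned earlier. An initial uncertainty $\delta_0$ then reaches a tolerance $\Delta$ after a time

$$
\delta(t) \approx \delta_0 \, e^{\lambda t}
\quad\Longrightarrow\quad
T_{\text{pred}} \approx \frac{1}{\lambda} \ln\frac{\Delta}{\delta_0}.
$$

The horizon depends on $\delta_0$ only logarithmically. Measuring the initial state a million times more precisely therefore extends it by only $(\ln 10^6)/\lambda \approx 13.8/\lambda$, a fixed additive amount rather than a multiple.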

Chaos brings a new challenge to the

reductionist view that a system can be

understood by breaking it down and

studying each piece. This view has

been prevalent in science in part

because there are so many systems for

which the behavior of the whole is

indeed the sum of its parts. Chaos

demonstrates, however, that a system

can have complicated behavior that

emerges as a consequence of simple,

nonlinear interaction of only a few

components.

The problem is becoming acute in

a wide range of scientific disciplines,

from describing microscopic physics

to modeling macroscopic behavior of

biological organisms. The ability to

obtain detailed knowledge of a system's

structure has undergone a tremendous

advance in recent years, but

the ability to integrate this knowledge

has been stymied by the lack of a proper

conceptual framework within which

to describe qualitative behavior. For

example, even with a complete map of

the nervous system of a simple organism,

such as the nematode studied by

Sidney Brenner of the University of

Cambridge, the organism's behavior

cannot be deduced. Similarly, the hope

that physics could be complete with an

increasingly detailed understanding of

fundamental physical forces and constituents

is unfounded. The interaction

of components on one scale can lead to

complex global behavior on a larger

scale that in general cannot be deduced

from knowledge of the individual components.

Chaos is often seen in terms of the

limitations it implies, such as lack of

predictability. Nature may, however,

employ chaos constructively. Through

amplification of small fluctuations it

can provide natural systems with access to

novelty. A prey escaping a

predator's attack could use chaotic

flight control as an element of surprise

to evade capture. Biological evolution demands

genetic variability; chaos provides a means of

structuring random changes, thereby providing

the possibility of putting variability

under evolutionary control.

Even the process of intellectual

progress relies on the injection of new

ideas and on new ways of connecting

old ideas. Innate creativity may have

an underlying chaotic process that

selectively amplifies small fluctuations

and molds them into macroscopic coherent

mental states that are experienced

as thoughts. In some cases the

thoughts may be decisions, or what are

perceived to be the exercise of will. In

this light, chaos provides a mechanism

that allows for free will within a world

governed by deterministic laws.

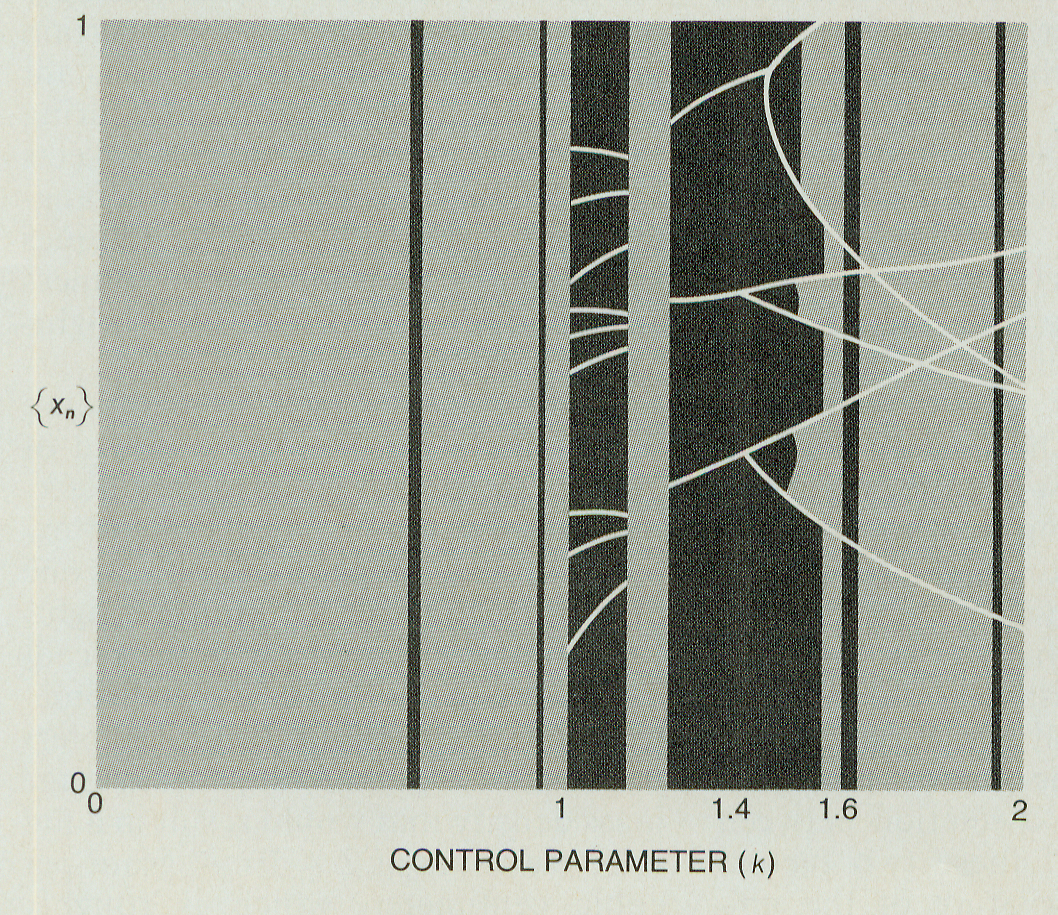

TRANSITION TO CHAOS is depicted schematically by means

of a bifurcation diagram: a plot of a family of attractors

(vertical axis) versus a control parameter

(horizontal axis). The diagram was generated by a

simple dynamical system that maps one number to another.

The dynamical system used here is called a circle

map, which is specified by the iterative equation

$x_{n+1} = \omega + x_n + \frac{k}{2\pi} \sin(2\pi x_n)$. For each

chosen value of the control parameter k a computer

plotted the corresponding attractor. The colors encode the

probability of finding points on the attractors: red

corresponds to regions that are visited frequently,

green to regions that are visited less frequently, and

blue to regions that are rarely visited. As k is

increased from 0 to 2 (see drawing at left), the

diagram shows two paths to chaos: a quasi-periodic route

(from k = 0 to k = 1, which corresponds

to the green region above) and a “period doubling”

route (from k = 1.4 to k = 2). The quasi-periodic

route is mathematically equivalent to a path that passes

through a torus attractor. In the period-doubling route, which is

based on the limit-cycle attractor, branches appear in pairs,

following the geometric series 2, 4, 8, 16, 32, and so on. The

iterates oscillate among the pairs of branches. (At a particular

value of k—1.6, for instance—the iterates visit only

two values.) Ultimately, the branch structure becomes so fine

that a continuous band structure emerges: a threshold is

reached beyond which chaos appears.
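The following is a minimal sketch of how data for such a diagram can be computed from the circle map in the caption. The caption does not state the value of the constant offset in the equation, so it is a free parameter here, called `omega`, and any value passed in is an assumption. The sketch also reduces iterates modulo 1, the usual convention for a circle map, which the caption does not spell out. For each k the orbit runs past a transient, and the values it then visits are recorded. Plotting those values against k, colored by a histogram of how often each region is visited, gives a diagram of this type.

```python
import math


def circle_map(x: float, k: float, omega: float) -> float:
    """x -> omega + x + (k / 2 pi) sin(2 pi x), reduced modulo 1 (assumed convention)."""
    return (omega + x + k / (2 * math.pi) * math.sin(2 * math.pi * x)) % 1.0


def attractor_samples(k: float, omega: float, x0: float = 0.1,
                      transient: int = 1000, keep: int = 200) -> list[float]:
    """Values visited by the orbit after the transient has died away."""
    x = x0
    for _ in range(transient):
        x = circle_map(x, k, omega)
    samples = []
    for _ in range(keep):
        x = circle_map(x, k, omega)
        samples.append(x)
    return samples


def bifurcation_diagram(omega: float, k_values):
    """(k, x) pairs; a scatter plot of these against k is the bifurcation diagram."""
    return [(k, x) for k in k_values for x in attractor_samples(k, omega)]


def distinct_values(samples, digits: int = 6) -> int:
    """Count distinct attractor values (1 = fixed point, 2 = period two, ...)."""
    return len({round(x, digits) % 1.0 for x in samples})
```

Two cases can be checked by hand. With `omega = 0` and `k = 1`, an orbit started at 0.1 settles onto the fixed point 0.5, so `distinct_values` returns 1. With `k = 0` the map is a pure rotation, and `omega = 0.25` gives a cycle through four values.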